Cybersecurity & ChatGPT - Part 2 - Generative AI for Blue Teams

Photo from Pixabay

In the last post of the series Cybersecurity & ChatGPT, we talked about the use of Generative AI (GenAI) technologies in offensive and defensive cybersecurity operations. In this new post, we dig deeper into how blue teams can leverage ChatGPT and similar Large Language Models (LLMs) to enhance their skills and capabilities in Threat Hunting, Digital Forensics, and Incident Response. Along the way, we explore the principal use cases of LLMs in defensive cybersecurity through several hands-on examples. We encourage you to follow along and run the exercises as we go: take your favorite LLM (ChatGPT, Gemini, Llama-2, etc.) and let's learn by doing. We also invite you to vary the prompts we propose, since different queries can produce very different responses. We will cover this topic in a future blog post about prompt engineering; for now, let's play, experiment with different queries, and compare the results. You will be surprised!

🚀 Empowering Cybersecurity Blue Teams: Unleashing the Potential of ChatGPT 🤖

As defenders of digital fortresses, blue teams face an overwhelming volume of data, rapidly evolving threats, and the constant pressure to stay one step ahead of adversaries. Traditional security measures, while essential, can be augmented by integrating advanced Generative AI technologies. From threat intelligence analysis to incident response, LLMs like ChatGPT offer a versatile set of capabilities that can enhance the efficiency and efficacy of blue team operations.

This blog post explores the multifaceted uses of ChatGPT in cybersecurity, shedding light on how these advanced language models can enhance threat detection, incident response, and overall resilience for blue teams in a dynamic and complex cybersecurity landscape. Join us on a journey into ChatGPT, where human ingenuity meets AI to fortify the defenses of modern cybersecurity operations. Let's start!

ChatGPT is a valuable asset for cybersecurity blue teams due to its ability to analyze and contextualize large amounts of textual data. Its natural language processing capabilities enable efficient parsing and comprehension of security alerts, logs, and incident reports, facilitating quicker identification of potential security threats. This technology helps both newcomers and experienced practitioners. For newcomers, ChatGPT acts as a virtual assistant that helps them perform advanced tasks and shortens the learning curve of new tools and technologies. For more experienced professionals, ChatGPT can automate routine tasks such as log analysis, freeing up time for more complex and strategic work. Overall, integrating ChatGPT gives blue teams enhanced information processing, communication, and task automation capabilities, contributing to a more robust and responsive defense strategy.

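In the following sections, we explore how ChatGPT can help security teams with the following tasks:

- Sigma rule generation

- Writing YARA rules

- Writing SIEM/EDR queries

- Finding code vulnerabilities

- Code de-obfuscation

- Malicious URL classification and command line analysis

- Parsing unstructured logs and writing reports

- Training and Cyber Threat Intelligence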

🔵 Grab your blue cap, it's time to act! 🛠️

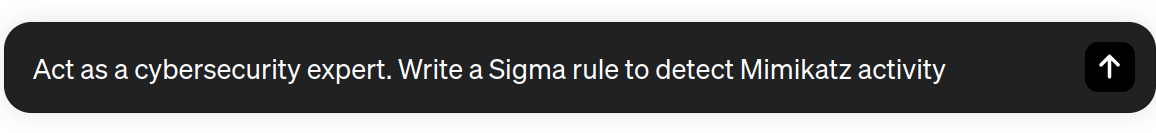

Sigma Rule Generation

Sigma is an open-source, generic signature format used in cybersecurity for creating and sharing threat detection methods across SIEM (Security Information and Event Management) systems. It allows cybersecurity teams to describe relevant log events in a vendor-neutral way, facilitating the detection of, and response to, potential security threats. ChatGPT was trained on large amounts of public data, which very likely include the public Sigma repository on GitHub and its thousands of example rules, so the model can write solid rules. Let's put it to the test!

```yaml
title: Detect Mimikatz Activity
id: detect_mimikatz_activity
status: experimental
description: Detects activity related to Mimikatz credential dumping
author: Your Name
date: 2023-11-22
tags:
    - attack.credential_access
    - attack.t1003
logsource:
    product: windows
    service: security
detection:
    selection:
        EventID:
            - 4673
            - 4688
        CommandLine|contains:
            - '\mimikatz.exe'
            - '\sekurlsa.dll'
            - '\lsadump.dll'
    condition: selection
falsepositives:
    - Legitimate use of tools similar to Mimikatz
    - Scheduled tasks or scripts
level: high
```

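As you can see, without any explicit instruction, ChatGPT has associated Mimikatz detection with two Windows Security Event Log events: EventID 4673 (sensitive privilege use, "a privileged service was called") and 4688 (process creation). It also checks whether the command line of these events contains references to the Mimikatz executable (mimikatz.exe) or to what it calls its associated DLLs (sekurlsa.dll and lsadump.dll). Not bad, although sekurlsa and lsadump are actually Mimikatz modules built into the executable rather than separate DLLs, so those two conditions are unlikely to match in practice.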
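To make the rule's detection logic concrete, here is a minimal Python sketch that evaluates the same selection against already-parsed events. It assumes each event is a plain dict with "EventID" and "CommandLine" keys (our assumption about field names; in a real deployment a Sigma backend translates the rule into a SIEM query instead), and it mirrors Sigma's default case-insensitive "contains" matching.

```python
# Toy evaluator for the Mimikatz rule's selection (illustration only, not a Sigma backend).
# Assumption: each event is a dict with "EventID" (int) and "CommandLine" (str) keys.
EVENT_IDS = {4673, 4688}
INDICATORS = ("\\mimikatz.exe", "\\sekurlsa.dll", "\\lsadump.dll")


def matches_mimikatz_rule(event: dict) -> bool:
    """True if the event has a watched EventID AND its command line contains any
    indicator (case-insensitive, like Sigma's default string matching)."""
    if event.get("EventID") not in EVENT_IDS:
        return False
    cmdline = (event.get("CommandLine") or "").lower()
    return any(indicator in cmdline for indicator in INDICATORS)


events = [
    {"EventID": 4688, "CommandLine": r"C:\Tools\mimikatz.exe privilege::debug"},
    {"EventID": 4688, "CommandLine": r"C:\Windows\System32\notepad.exe"},
    {"EventID": 4624, "CommandLine": r"C:\Tools\mimikatz.exe"},  # wrong EventID
]
for e in events:
    print(e["EventID"], matches_mimikatz_rule(e))
```

Matching on file names alone is easy to evade by renaming the binary, which is one reason to also consider the keyword-based variant discussed next.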

A good strategy when generating content with LLMs is to regenerate the response. Because text generation is stochastic (controlled by the temperature parameter), the same query can produce different answers. For example, when we regenerate the response, ChatGPT creates a new Sigma rule that matches common keywords from Mimikatz commands and output: ['LSADump', 'wdigest', 'sekurlsa', 'msv', 'tspkg', 'kerberos', 'Authentication Packages', 'Authentication Ticket Request', 'Security Account Manager']. To build the best solution for our task, we can combine the rules ourselves or ask ChatGPT to merge them for us.
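Why does regenerating help? In a simplified view, the model turns the scores (logits) of candidate next tokens into probabilities with a softmax divided by the temperature, and then samples from them. The Python sketch below uses made-up logits for three hypothetical tokens to show the effect: a low temperature concentrates almost all probability on the top token, while a higher temperature flattens the distribution, so regenerated answers diverge more. Real services combine this with other sampling settings (such as top-p), which the sketch ignores.

```python
import math
import random


def softmax_with_temperature(logits, temperature):
    """Convert raw scores into probabilities; temperature must be > 0."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_token(tokens, logits, temperature, rng):
    """Draw one token according to the temperature-scaled distribution."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(tokens, weights=probs, k=1)[0]


# Hypothetical next-token candidates and logits (illustrative values only).
tokens = ["sekurlsa", "lsadump", "wdigest"]
logits = [2.0, 1.0, 0.5]

for t in (0.2, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])

rng = random.Random(0)  # fixed seed so the run is reproducible
print([sample_token(tokens, logits, 1.0, rng) for _ in range(5)])
```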

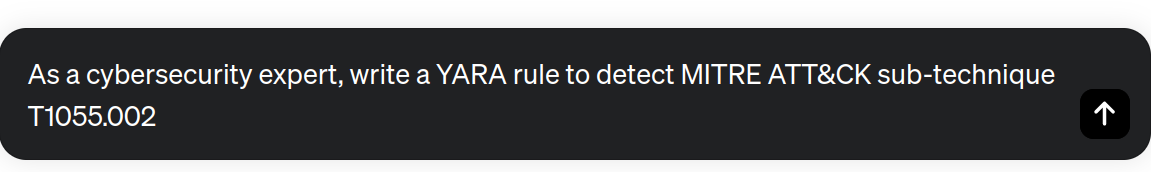

Writing YARA Rules

Writing YARA rules is another widespread use of ChatGPT in the cybersecurity community. YARA rules are essential for identifying and classifying malware based on specific patterns or characteristics. Integrating Generative AI into the rule-generation process can make this task faster and more effective.

First, ChatGPT can help cybersecurity teams understand and describe complex malware patterns in a human-readable way. This can significantly reduce the learning curve for creating YARA rules, making the task accessible to a broader range of security professionals, including those without deep expertise in malware analysis. Moreover, ChatGPT can speed up rule generation by offering suggestions and automating parts of the task. For example, given code snippets from a malware sample, it can generate a YARA rule to detect that malware at scale.

Another advantage is the model's adaptability. ChatGPT can propose new approaches to rule creation, drawing on the vast amount of information and patterns it encountered during training. This can help when dealing with new or evolving threats, giving cybersecurity professionals ideas for adapting their rules to new attack vectors and staying ahead of the curve.

Now, let's use ChatGPT to generate a YARA rule to detect one common adversary technique of the MITRE ATT&CK® framework.

```yara
rule Detect_Process_Injection
{
    meta:
        description = "Detects process injection (T1055.002)"
        author = "Your Name"
        reference = "MITRE ATT&CK T1055.002"

    strings:
        $pattern1 = "WriteProcessMemory"
        $pattern2 = "CreateRemoteThread"
        $pattern3 = "VirtualAllocEx"
        $pattern4 = "VirtualAlloc"
        $pattern5 = "NtMapViewOfSection"
        $pattern6 = "NtCreateSection"

    condition:
        2 of ($pattern*)
}
```

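We see that ChatGPT correctly links sub-technique T1055.002 (Portable Executable Injection) to process injection. To hunt for this kind of attack, the rule searches for the names of API functions commonly used by the technique, such as “WriteProcessMemory”, “CreateRemoteThread”, “VirtualAllocEx”, “VirtualAlloc”, “NtMapViewOfSection”, and “NtCreateSection”. Keep in mind that many legitimate programs import these functions too, so a rule based only on API names is better treated as a hunting lead than as a high-confidence detection.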
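To see what the "2 of ($pattern*)" condition means in practice, here is a small Python emulation of it over raw bytes (an illustration of the rule's logic, not a replacement for the YARA engine). YARA text strings without modifiers are case-sensitive ASCII and match anywhere in the file, which the sketch mimics with a plain substring test. One consequence is easy to miss: because "VirtualAlloc" is a prefix of "VirtualAllocEx", a file containing only the string "VirtualAllocEx" already satisfies "2 of" ($pattern3 and $pattern4 both match), so the rule is looser than it looks.

```python
# Emulates the strings and the "2 of ($pattern*)" condition of Detect_Process_Injection
# (illustration only; use YARA itself for real scanning).
PATTERNS = {
    "pattern1": b"WriteProcessMemory",
    "pattern2": b"CreateRemoteThread",
    "pattern3": b"VirtualAllocEx",
    "pattern4": b"VirtualAlloc",
    "pattern5": b"NtMapViewOfSection",
    "pattern6": b"NtCreateSection",
}


def matched_patterns(data: bytes) -> list:
    """Names of the patterns found anywhere in the data (case-sensitive)."""
    return [name for name, needle in PATTERNS.items() if needle in data]


def rule_matches(data: bytes, threshold: int = 2) -> bool:
    """True when at least `threshold` distinct patterns occur, like '2 of ($pattern*)'."""
    return len(matched_patterns(data)) >= threshold


# A fake import table that only references VirtualAllocEx.
sample = b"\x00kernel32.dll\x00VirtualAllocEx\x00CloseHandle\x00"
print(matched_patterns(sample), rule_matches(sample))
```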

It is worth mentioning that not all LLMs can generate accurate YARA rules. Smaller LLMs tend to struggle with programming and other complex tasks because of weaker reasoning, less training data, and smaller context windows. For example, the well-known open-source LLM family Llama-2 has difficulty generating YARA rules. We invite you to run the prompt on different LLMs and compare the outputs. For instance, you can try the different versions of Llama-2, from the entry model with 7 billion parameters (Llama-2-7B) to the largest one with 70 billion (Llama-2-70B). These models are available on Hugging Face at no cost (after accepting Meta's license). You will likely find that models like Gemini or ChatGPT perform better at building complex structures like YARA rules or at writing code.

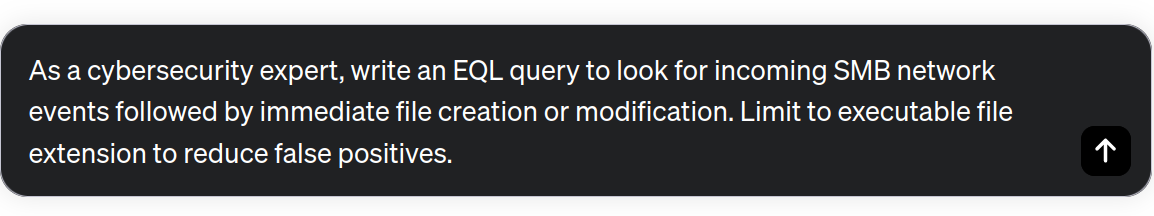

Write SIEM/EDR Queries

By leveraging ChatGPT's natural language processing capabilities, cybersecurity professionals can formulate SIEM or EDR queries more intuitively. This is especially helpful for users who are not familiar with a particular query syntax: they describe what they want in plain text and receive a detailed, contextually relevant query in return. With LLMs, the time spent crafting queries can be significantly reduced. These models can quickly generate advanced queries from natural language input, streamlining query creation and letting security teams allocate their time and resources more efficiently.

In the following example, we generate a query for Elastic, a well-known SIEM. ChatGPT answers in Elastic's Event Query Language (EQL).

```eql
sequence
  [ network where
      event.type == "network"
      and (network.protocol == "smb" or network.port == 445)
  ]
  [ file where
      event.type == "file"
      and file.action in ["created", "modified"]
      and file.extension in ["exe", "dll", "sys", "bat", "com"]
      and file.parent.path == network.destination.ip
  ]
```

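While the above query is not perfect and needs some fine-tuning, it serves as an excellent starting point towards our ultimate objective. For instance, “network” and “file” are values of “event.category” (which the “network where” and “file where” clauses already filter on), not of “event.type”. In addition, “network.port” and “file.action” are not standard ECS fields (“destination.port” and “event.action” are), and EQL writes “in” lists with parentheses rather than brackets. Finally, the last condition compares a file path with an IP address, whereas EQL sequences correlate events through join keys such as “sequence by host.id”. It's essential to acknowledge that ChatGPT and comparable LLMs were trained on public internet data, so ChatGPT has seen many versions of query languages and programming languages. Consequently, the model may occasionally make errors and generate queries that blend different versions of a query language.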
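EQL sequences match ordered steps per join key (for example, per host). The Python sketch below emulates a two-step sequence joined on the host: an SMB connection (destination port 445) followed, on the same host, by the creation or modification of an executable file. The flattened event fields (ts, host, category, port, type, extension) are hypothetical names chosen for the example, and real EQL offers features such as "maxspan" time windows that the sketch omits.

```python
# Rough emulation of an EQL-style two-step sequence joined by host
# (hypothetical flattened event fields; not Elastic's implementation).
EXEC_EXTENSIONS = {"exe", "dll", "sys", "bat", "com"}


def is_smb_connection(ev: dict) -> bool:
    return ev["category"] == "network" and ev.get("port") == 445


def is_exec_write(ev: dict) -> bool:
    return (ev["category"] == "file"
            and ev.get("type") in {"creation", "change"}
            and ev.get("extension") in EXEC_EXTENSIONS)


def smb_then_exec_write(events: list) -> list:
    """Hosts where an SMB connection is followed by an executable file write."""
    seen_smb = set()  # hosts that already matched step 1
    alerts = []
    for ev in sorted(events, key=lambda e: e["ts"]):
        host = ev["host"]
        if is_smb_connection(ev):
            seen_smb.add(host)
        elif host in seen_smb and is_exec_write(ev):
            alerts.append(host)
            seen_smb.discard(host)  # reset so the next sequence starts fresh
    return alerts


events = [
    {"ts": 1, "host": "ws01", "category": "network", "port": 445},
    {"ts": 2, "host": "ws02", "category": "file", "type": "creation", "extension": "exe"},
    {"ts": 3, "host": "ws01", "category": "file", "type": "creation", "extension": "dll"},
]
print(smb_then_exec_write(events))
```

Here only ws01 raises an alert: ws02 writes an executable but never made an SMB connection first.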

Find Code Vulnerabilities

Another promising use of Generative AI is finding vulnerabilities in code. LLMs like ChatGPT or Gemini can understand programming concepts and generate code in many languages.

One way to leverage ChatGPT for identifying vulnerabilities is by engaging in a conversation with the model about specific code or programming practices. Users can describe the code they're working on or provide snippets of code. Then, the user can ask for advice or potential security concerns about the project. The model can analyze the information provided and offer insights based on its understanding of programming logic and common security best practices. ChatGPT can highlight potential weaknesses and guide developers toward more secure coding practices, suggesting safer code snippets.

To test this functionality, we give ChatGPT a code snippet vulnerable to SQL injection and ask for an analysis of it.

User:

```python
import sqlite3

# Connect to the SQLite database (create a new one if it doesn't exist)
conn = sqlite3.connect('example.db')

# Create a cursor object to interact with the database
cursor = conn.cursor()

# Unsafe way: Concatenate user input directly into the SQL query (vulnerable to SQL injection)
user_input = input("Enter a name: ")
query = "SELECT * FROM users WHERE name = '" + user_input + "'"

# Execute the query
cursor.execute(query)

# Fetch and print the results
results = cursor.fetchall()
for row in results:
    print(row)

# Close the cursor and connection
cursor.close()
conn.close()
```

ChatGPT:

```python
import sqlite3

# Connect to the SQLite database (create a new one if it doesn't exist)
conn = sqlite3.connect('example.db')

# Create a cursor object to interact with the database
cursor = conn.cursor()

# Safe way: Use parameterized statements to prevent SQL injection
user_input = input("Enter a name: ")
query = "SELECT * FROM users WHERE name = ?"

# Execute the query with a tuple containing the user input
cursor.execute(query, (user_input,))

# Fetch and print the results
results = cursor.fetchall()
for row in results:
    print(row)

# Close the cursor and connection
cursor.close()
conn.close()
```

In our example, ChatGPT advises us to use parameterized queries to prevent SQL injection and encourages the adoption of secure coding guidelines such as those published by OWASP (Open Web Application Security Project). Finally, the model rewrites the code to protect it against SQL injection attacks.
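The difference between the two versions is easy to verify yourself. This self-contained Python sketch uses an in-memory SQLite database with a tiny, made-up users table, and sends the classic payload ' OR '1'='1 through both the concatenated query and the parameterized one.

```python
import sqlite3

# In-memory database with a tiny, made-up users table for the demo.
conn = sqlite3.connect(":memory:")
cur = conn.cursor()
cur.execute("CREATE TABLE users (name TEXT, email TEXT)")
cur.executemany("INSERT INTO users VALUES (?, ?)",
                [("alice", "alice@example.com"), ("bob", "bob@example.com")])

payload = "' OR '1'='1"  # classic injection string supplied as the "name"

# Vulnerable: the payload becomes part of the SQL text itself.
unsafe_sql = "SELECT * FROM users WHERE name = '" + payload + "'"
unsafe_rows = cur.execute(unsafe_sql).fetchall()

# Safe: the payload is bound as a value and compared literally.
safe_rows = cur.execute("SELECT * FROM users WHERE name = ?", (payload,)).fetchall()

print("unsafe:", len(unsafe_rows), "rows")  # the WHERE clause is always true
print("safe:", len(safe_rows), "rows")      # no user is literally named like the payload
conn.close()
```

With concatenation the query becomes "... WHERE name = '' OR '1'='1'" and returns every row; with the placeholder it returns none.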

In addition to SQL injection, ChatGPT can help identify other vulnerabilities and weaknesses, such as:

- Denial-of-service (DoS) weaknesses

- Cross-site scripting (XSS)

- Code bugs (buffer overflows, infinite loops, etc.)

- Cross-site request forgery (CSRF)

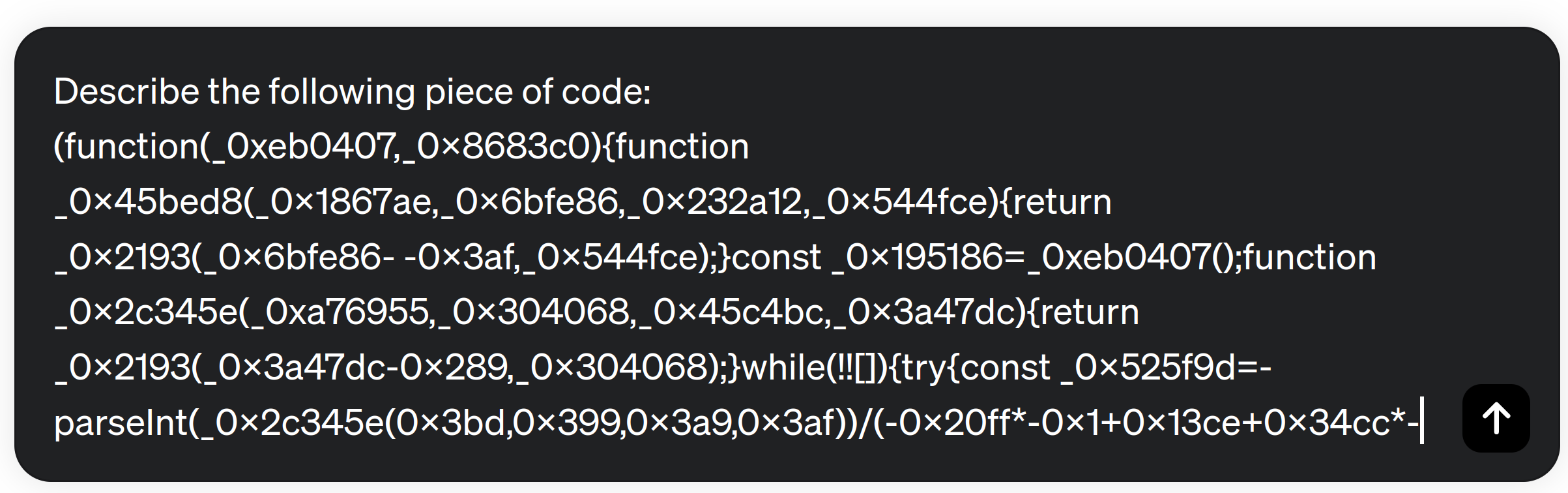

Code De-obfuscation

LLMs show remarkable capabilities for analyzing and de-obfuscating code. They can navigate complex code, understand many programming languages, and uncover obscured logic. Their ability to decipher the intent behind obfuscated code makes them valuable tools for cybersecurity professionals.

To test ChatGPT's code de-obfuscation capabilities, we provide it with a heavily obfuscated JavaScript snippet. As a base, we chose a simple program that computes the Fibonacci sequence; its original, non-obfuscated version is shown below.

Original Code:

```javascript
// "number" (how many terms to print) is defined elsewhere in the program
let n1 = 0, n2 = 1, nextTerm;

for (let i = 1; i <= number; i++) {
    console.log(n1);
    nextTerm = n1 + n2;
    n1 = n2;
    n2 = nextTerm;
}
```

ChatGPT immediately describes the main features of the code. For example, it detects that the code iterates to compute certain math operations, and it ends up identifying the Fibonacci calculation: “Loop for Fibonacci sequence calculation”. The model then provides the following de-obfuscated code with a brief explanation: “This part of the code calculates and prints the Fibonacci sequence up to a specified limit (number). The initial values (n1 and n2) are set, and the loop iterates to calculate and print the next term in the sequence”.

De-obfuscated Code:

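```javascript
let n1 = 0x18b5; // initial values
let n2 = -0x164f;
let nextTerm;

for (let i = 0; i <= number; i++) {
    console.log(n1);
    nextTerm = n1 + n2;
    n1 = n2;
    n2 = nextTerm;
}
```

Note that this de-obfuscated version is not functionally equivalent to the original: it keeps the hex-encoded constants 0x18b5 and -0x164f (6325 and -5711) as initial values instead of 0 and 1, and it starts the loop at i = 0 instead of i = 1, so it prints one extra term. As with any LLM output, the result must be verified before relying on it.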
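Whether a de-obfuscated version really preserves behavior can be checked cheaply with differential testing: run both versions on the same inputs and compare the outputs. The sketch below is our own hand port of the two JavaScript loops to Python, with "number" turned into a function parameter because the snippets leave it undefined.

```python
def original_fib(number: int) -> list:
    """Port of the original loop: n1=0, n2=1, i from 1 to number inclusive."""
    out = []
    n1, n2 = 0, 1
    for _ in range(1, number + 1):
        out.append(n1)           # console.log(n1)
        n1, n2 = n2, n1 + n2     # nextTerm = n1 + n2; n1 = n2; n2 = nextTerm
    return out


def deobfuscated_fib(number: int) -> list:
    """Port of ChatGPT's version: hex initial values, i from 0 to number inclusive."""
    out = []
    n1, n2 = 0x18B5, -0x164F
    for _ in range(0, number + 1):
        out.append(n1)
        n1, n2 = n2, n1 + n2
    return out


for number in (1, 5, 10):
    a, b = original_fib(number), deobfuscated_fib(number)
    print(number, "equivalent" if a == b else "DIFFERENT", a[:3], b[:3])
```

Every input is reported as DIFFERENT, which is exactly the kind of regression a quick differential test catches before an analyst trusts the LLM's rewrite.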

Malicious URL Classification & Command Line Analysis

While ChatGPT isn't specifically designed for URL classification, it can be combined with other tools and approaches to identify malicious URLs and websites. Cybersecurity analysts can use LLMs as a threat intelligence source to check whether a URL shows patterns indicative of malicious intent. For this type of task, it is advisable to use LLMs with internet access, such as GPT-4 in ChatGPT with browsing enabled, or Gemini. These models can retrieve up-to-date information about domains and websites instead of relying only on their training data.

LLMs can extract relevant features from URLs, such as the length of the URL, the presence of suspicious characters or patterns, the domain and subdomains, and the structure of the path. Following [2], we analyze the URL hxxp://caseld-10xxxx.info/1/Office365/0fflce3.6.5/live/login.php with ChatGPT. In this example, instead of using ChatGPT's web interface, we query the model through the OpenAI API. With this approach, the model could become one component of a complete system for detecting malicious URLs. To parse the model's output reliably, we instruct ChatGPT to return its answer in JSON format, as follows.

User Prompt:

```json
[
  {"role": "system", "content": "You are CyberGPT, a guru cyber security assistant AI."},
  {"role": "user", "content": "Does this link lead to a phish website? Answer in the json format {\"phish\": \"yes|no\", \"reasoning\": \"detailed explanation why\", \"target_company\": \"possible targeted company\"} Link: http://caseld-10xxxx.info/1/Office365/0fflce3.6.5/live/login.php"}
]
```

ChatGPT Response:

```json
{
  "phish": "yes",
  "reasoning": "The provided link (http://caseld-10xxxx.info/1/Office365/0fflce3.6.5/live/login.php) exhibits characteristics commonly associated with phishing attempts. The domain 'caseld-10xxxx.info' appears suspicious and does not align with official Microsoft or Office 365 domains. Additionally, the path 'live/login.php' in the URL is indicative of an attempt to mimic a login page, which is a common tactic used by phishing sites to harvest credentials. Exercise caution and avoid interacting with this link.",
  "target_company": "Microsoft Office 365"
}
```
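When the model is wired into an automated pipeline, two cheap safeguards help: computing some of the lexical features mentioned above deterministically, and validating the JSON verdict before acting on it. The Python sketch below does both with the standard library only. The feature set, the BRAND_WORDS watch list, and the function names are our own illustrative choices, not part of [2] or of any OpenAI API, and the API call itself is left out. Subtler tricks such as the "0fflce" lookalike spelling are left to the model.

```python
import json
from urllib.parse import urlsplit

# Hypothetical watch list of brand names that phishing URLs like to imitate.
BRAND_WORDS = ("office", "microsoft", "outlook", "paypal")


def lexical_features(url: str) -> dict:
    """A few simple, explainable URL features (illustrative, not exhaustive)."""
    parts = urlsplit(url)
    host = parts.hostname or ""  # urlsplit lowercases the hostname
    path = parts.path.lower()
    return {
        "length": len(url),
        "digits": sum(ch.isdigit() for ch in url),
        "hyphen_in_host": "-" in host,
        "tld": host.rsplit(".", 1)[-1],
        "login_page": path.endswith("login.php"),
        # Brand names in the path but not in the host are a classic phishing hint.
        "brand_in_path_only": [w for w in BRAND_WORDS if w in path and w not in host],
    }


def parse_verdict(reply: str) -> dict:
    """Parse the JSON answer requested in the prompt and check its fields."""
    data = json.loads(reply)  # raises a ValueError subclass on malformed JSON
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    if data.get("phish") not in ("yes", "no"):
        raise ValueError("unexpected 'phish' value: %r" % (data.get("phish"),))
    for key in ("reasoning", "target_company"):
        if not isinstance(data.get(key), str):
            raise ValueError("missing or non-string field: " + key)
    return data


url = "http://caseld-10xxxx.info/1/Office365/0fflce3.6.5/live/login.php"
print(lexical_features(url))

reply = '{"phish": "yes", "reasoning": "suspicious domain", "target_company": "Microsoft Office 365"}'
verdict = parse_verdict(reply)
print(verdict["phish"], verdict["target_company"])
```

Rejecting malformed or unexpected answers (instead of guessing) keeps a single odd completion from silently turning into a wrong block or allow decision.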

As we can observe, ChatGPT analyzes the provided URL and identifies several suspicious patterns and a likely malicious intent. Beyond prompting general-purpose LLMs, researchers have trained smaller, specialized models, such as CNNs or fine-tuned Transformers like BERT, to classify URLs, often with greater precision. For example, URLNet [3] uses Convolutional Neural Networks (CNNs) to learn non-linear character-level and word-level embeddings of URLs for malicious URL detection. In [4], the authors train a Transformer model (BERT) on URL strings; its self-attention mechanism captures the relations among URL substrings to classify malicious URLs.

This kind of approach is not limited to URL classification. Cybersecurity teams use similar models to analyze system processes or detect malicious command-line executions. For example, the CrowdStrike Falcon® platform uses BERT to learn representations of command lines and then applies anomaly detection models such as PCA, COPOD, autoencoders, or Isolation Forest (iForest) to the command-line embeddings to detect outliers [5]. Previously, Sophos's research team released a Command Analyzer framework that describes command lines in natural language [6]. The framework interfaces with ChatGPT to describe complex command-line executions and their arguments. The following output is an example provided by the authors of the project.

Input:

```python
command = "C:\\WINDOWS\\system32\\cmd.exe /Q /c echo dir \"C:\\Users\\admin\\OneDrive ADMINISTRATORS INC\" ^> \\\\127.0.0.1\\C$\\__output 2^>^&1 > C:\\WINDOWS\\TEMP\\execute.bat & C:\\WINDOWS\\system32\\cmd.exe /Q /c C:\\WINDOWS\\TEMP\\execute.bat & del C:\\WINDOWS\\TEMP\\execute.bat"
tags = "win_local_system_owner_account_discovery"
```

Output:

The command will echo the “dir” command to a file called “execute.bat”, write the command to the “execute.bat” file, and then execute the “execute.bat” file. This command will list the contents of the directory “C:\Users\admin\OneDrive ADMINISTRATORS INC” and write the output to “\\127.0.0.1\C$\output”. The “dir” command will be executed as the “Local System” account.

baseline_description: The command will list the contents of the “C:\Users\admin\OneDrive ADMINISTRATORS INC” directory and save the output to “C:\output”. It will be executed as the LocalSystem account.
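The embedding-plus-anomaly-detection idea described in [5] can be illustrated with a deliberately tiny stand-in. Instead of BERT embeddings and models such as iForest, the Python sketch below represents each command line as a bag of character trigrams and scores it by cosine similarity to the centroid of a small baseline of "normal" commands. The baseline commands are made up for the example, and this is a toy for intuition, not CrowdStrike's or Sophos's method.

```python
import math
from collections import Counter


def trigrams(cmd: str) -> Counter:
    """Bag of lowercase character trigrams for one command line."""
    s = cmd.lower()
    return Counter(s[i:i + 3] for i in range(len(s) - 2))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(v * b[k] for k, v in a.items())  # missing keys count as 0
    na = math.sqrt(sum(v * v for v in a.values()))
    nb = math.sqrt(sum(v * v for v in b.values()))
    return dot / (na * nb) if na and nb else 0.0


def build_centroid(baseline) -> Counter:
    """Summed trigram counts of the baseline (only the direction matters for cosine)."""
    centroid = Counter()
    for cmd in baseline:
        centroid.update(trigrams(cmd))
    return centroid


def anomaly_score(cmd: str, centroid: Counter) -> float:
    """1 - similarity: higher means less like the baseline."""
    return 1.0 - cosine(trigrams(cmd), centroid)


baseline = [  # hypothetical "normal" command lines
    r"C:\Windows\System32\svchost.exe -k netsvcs -p",
    r"C:\Windows\System32\svchost.exe -k LocalService -p",
    r"C:\Windows\System32\svchost.exe -k NetworkService -p",
]
centroid = build_centroid(baseline)
candidates = [
    r"C:\Windows\System32\svchost.exe -k LocalSystemNetworkRestricted -p",
    r"C:\WINDOWS\system32\cmd.exe /Q /c echo dir ^> \\127.0.0.1\C$\__output 2^>^&1",
]
for cmd in candidates:
    print(round(anomaly_score(cmd, centroid), 3), cmd)
```

The svchost variant scores close to the baseline, while the cmd.exe command from the example above stands out. A production system would use learned embeddings and a proper outlier model with a tuned threshold.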

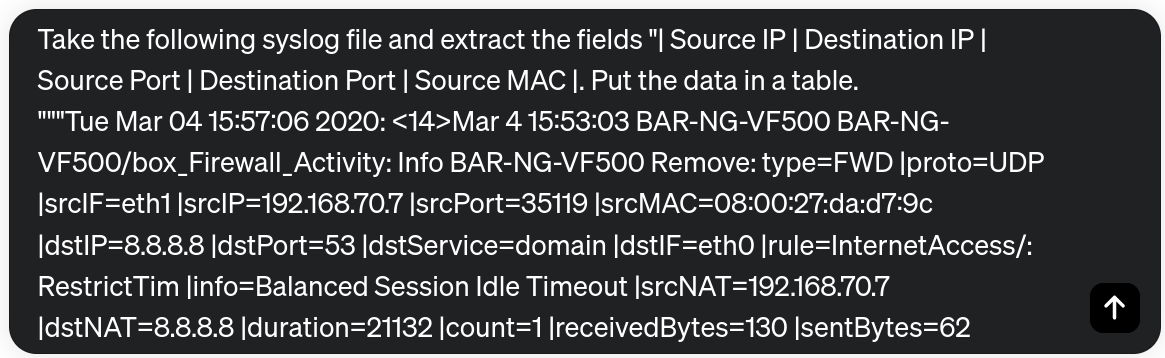

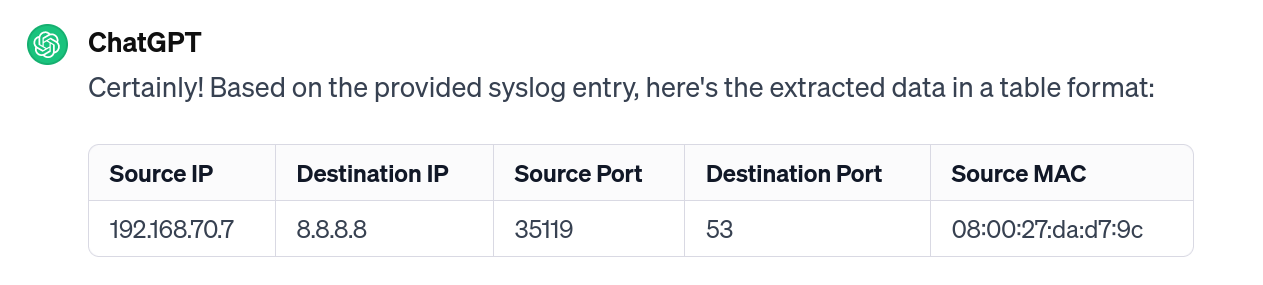

Parsing Unstructured Logs & Write Reports

ChatGPT can be a valuable tool for analyzing and parsing unstructured logs. It can help create or refine regular expressions and parsing rules to extract relevant information from unstructured, text-based data. In addition, cybersecurity analysts can ask ChatGPT in plain text to formulate complex queries for log analysis. These features make LLMs a natural complement to SIEM or EDR tools, helping cybersecurity teams extract key information from logs regardless of their source or format.

In the following example, we use ChatGPT to extract some fields from a raw syslog record.

We observe how ChatGPT understands the semantics of the log and extracts the fields we requested, even when they have a different name in the record. Note that we requested “Destination IP”, but this field corresponds to “srcIP” in our record.
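Because LLM extraction can occasionally mislabel or invent fields, it is worth pairing it with a deterministic parser; the regular expressions below are the kind of artifact ChatGPT can help draft and an analyst can then test. This is a minimal Python sketch that assumes a hypothetical BSD-syslog (RFC 3164) style firewall record with key=value fields, our own sample rather than the record used above.

```python
import re

# Hypothetical firewall record in a BSD-syslog (RFC 3164) style layout.
LINE = ("<134>Nov 22 10:15:32 fw01 kernel: action=DROP srcIP=10.0.0.5 "
        "dstIP=203.0.113.7 srcPort=51514 dstPort=445 proto=TCP")

HEADER = re.compile(
    r"^<(?P<pri>\d{1,3})>"                                      # PRI = facility * 8 + severity
    r"(?P<timestamp>[A-Z][a-z]{2} [ \d]\d \d{2}:\d{2}:\d{2}) "  # e.g. "Nov 22 10:15:32"
    r"(?P<host>\S+) (?P<tag>[^:]+): (?P<message>.*)$"
)
KEY_VALUE = re.compile(r"(\w+)=(\S+)")


def parse_syslog(line: str) -> dict:
    """Split a syslog line into header fields plus key=value pairs from the message."""
    m = HEADER.match(line)
    if m is None:
        raise ValueError("not a recognised syslog line")
    record = m.groupdict()
    pri = int(record.pop("pri"))
    record["facility"], record["severity"] = divmod(pri, 8)
    record["fields"] = dict(KEY_VALUE.findall(record["message"]))
    return record


rec = parse_syslog(LINE)
print(rec["host"], rec["facility"], rec["severity"], rec["fields"])
```

For PRI 134, divmod gives facility 16 (local0) and severity 6 (informational).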

Although these models show promising capabilities for cybersecurity, there are some considerations and limitations to take into account. One of them is data privacy and anonymization. If we use online models such as ChatGPT or Gemini, our data is exposed to third-party companies. To protect our data, we could run open-source LLMs such as Llama-2 on private servers, although these models typically perform worse. Another workaround is to anonymize the data before sending it to the model, using systems like the one proposed in [7]. However, these systems are not yet mature and could fail on raw data in varied formats, opening the door to possible data leaks.
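As a minimal illustration of the anonymize-before-sending idea (our own sketch, not the system proposed in [7]), the Python code below replaces each distinct IPv4 address with a stable placeholder before the text leaves the organization, then maps the placeholders in the model's answer back to the real addresses. It deliberately handles only IPv4; hostnames, usernames, and other identifiers pass through untouched, which is exactly the kind of gap the paragraph above warns about.

```python
import ipaddress
import re

IPV4 = re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b")


def pseudonymize_ips(text: str):
    """Replace each distinct IPv4 address with a stable placeholder (IP_1, IP_2, ...)."""
    mapping = {}  # original address -> placeholder

    def repl(match):
        ip = match.group(0)
        try:
            ipaddress.IPv4Address(ip)  # skip look-alikes such as 999.1.1.1
        except ValueError:
            return ip
        if ip not in mapping:
            mapping[ip] = "IP_%d" % (len(mapping) + 1)
        return mapping[ip]

    return IPV4.sub(repl, text), mapping


def restore(text: str, mapping: dict) -> str:
    """Put the original addresses back into the model's answer."""
    # Replace longer placeholders first so that IP_1 does not clobber IP_10.
    for ip, token in sorted(mapping.items(), key=lambda kv: -len(kv[1])):
        text = text.replace(token, ip)
    return text


log = "srcIP=10.0.0.5 dstIP=203.0.113.7 retry from 10.0.0.5"
masked, mapping = pseudonymize_ips(log)
print(masked)
print(restore("Block IP_2, investigate IP_1", mapping))
```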

Another crucial consideration is the maximum input size of the LLM. Raw log files are typically rich in fields and information, and some tasks require processing many logs at once. LLMs are limited to a maximum number of tokens per prompt, and a single word is often split into several tokens. For instance, in our measurements a paragraph of 157 words amounted to 200 tokens. Consequently, the logs we want to send may exceed the prompt's capacity. Notably, GPT-3.5 has a maximum input size of 4k tokens, while GPT-4 can process up to 8k or 32k tokens, depending on the version. Therefore, when using LLMs to analyze large volumes of data, we need to pre-process the data to fit within the maximum prompt size.
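One simple pre-processing strategy is to pack log lines into batches that stay under a token budget. The Python sketch below estimates tokens with the rough rule of thumb of about four characters per token for common English text. That is only an approximation: logs full of IPs and hex strings usually tokenize worse, and exact counts need the model's own tokenizer (for OpenAI models, the tiktoken library). The budget figures are illustrative assumptions.

```python
import math


def estimate_tokens(text: str, chars_per_token: float = 4.0) -> int:
    """Rough token estimate; exact counts require the model's own tokenizer."""
    return math.ceil(len(text) / chars_per_token)


def chunk_lines(lines, budget_tokens: int) -> list:
    """Greedily pack whole log lines into chunks whose estimated size fits the budget."""
    chunks, current, used = [], [], 0
    for line in lines:
        cost = estimate_tokens(line) + 1  # +1 as a rough allowance for the newline
        if current and used + cost > budget_tokens:
            chunks.append("\n".join(current))
            current, used = [], 0
        current.append(line)  # an oversized line still ends up in a chunk of its own
        used += cost
    if current:
        chunks.append("\n".join(current))
    return chunks


# Hypothetical numbers: a 4k-token context, keeping ~1k tokens for the
# instructions and the model's answer, leaves ~3k tokens for log data.
BUDGET = 4096 - 1096
lines = ["event %d: user=alice action=login status=ok" % i for i in range(1000)]
chunks = chunk_lines(lines, BUDGET)
print(len(chunks), "chunks;", [estimate_tokens(c) for c in chunks])
```

Each chunk is then sent with the same instructions, and the per-chunk answers are merged afterwards. A line larger than the whole budget would still need truncation or a dedicated summarization step.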

Similarly, security teams face challenges when using LLMs to generate cybersecurity reports because of limits on prompt input and output size. However, there have been noteworthy developments that relax these limits. Claude 2.1 [8], for example, can handle 200k input tokens, roughly 150,000 words or several hundred pages of text. OpenAI is also expanding the context windows of its new models: GPT-4 Turbo offers a context window of 128k tokens.

Training & Cyber Threat Intelligence

ChatGPT provides significant benefits for CTI (Cyber Threat Intelligence) teams. One of the primary advantages is fast and efficient analysis of large amounts of text. Among many other tasks, CTI teams monitor data sources such as forums, social media, and articles for new threat actor tactics and techniques. LLMs' text-processing capabilities let teams quickly sift through large volumes of unstructured data, identify relevant information, and extract key insights. LLMs can also help analysts create tailored threat landscapes or craft hypotheses.

In the following example, we use ChatGPT as a knowledge repository to keep up with threats, vulnerabilities, and mitigation strategies. We ask the model for best practices to protect against the use of PowerShell to execute malicious code (MITRE ATT&CK sub-technique T1059.001).

Immediately, ChatGPT lists the following 10 security best practices to mitigate the risk associated with PowerShell-based attacks.

| Action | Description |
|---|---|
| Application Whitelisting | Use application whitelisting to allow only authorized PowerShell scripts and binaries to run on endpoints. This helps prevent the execution of malicious PowerShell scripts. |
| Least Privilege Principle | Apply the principle of least privilege to limit user and system accounts to only the permissions and access they need to perform their tasks. Avoid using accounts with administrative privileges for routine tasks. |
| Script Block Logging | Enable PowerShell script block logging to record all PowerShell commands and scripts executed on a system. This helps in identifying and investigating potentially malicious activities. |
| Audit PowerShell Activity | Regularly review and analyze PowerShell logs for suspicious activities. Establish baselines for normal behavior and promptly investigate any deviations. |
| Monitoring and Logging | Set up comprehensive logging for command and script executions. Monitor these logs for suspicious activities, and establish alerts for potential security incidents. |
| Disable PowerShell in Unnecessary Environments | In environments where PowerShell is not necessary for regular operations, consider disabling or restricting its use entirely. |
| Network Segmentation | Implement network segmentation to limit lateral movement in case of a successful compromise. Restrict communication channels and permissions between different segments of the network. |
| Endpoint Protection | Use endpoint protection solutions, including antivirus and anti-malware tools, to detect and prevent the execution of malicious scripts or commands. |
| Behavioral Analysis | Implement behavioral analysis tools that can detect unusual or malicious behavior on your network. This can include abnormal command executions or patterns. |
| User Training and Awareness | Educate users about social engineering tactics and the dangers of executing commands or scripts from untrusted sources. Promote a culture of security awareness within your organization. |
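As a concrete example of the "Audit PowerShell Activity" row: PowerShell's -EncodedCommand parameter takes a Base64 string of UTF-16LE text, and attackers frequently use it to hide scripts from casual inspection, so decoding it is a common first step when reviewing process-creation logs. The minimal Python sketch below covers the full parameter name and the short forms -enc, -ec and -e, but not every abbreviation PowerShell accepts, so treat the regex as a starting point.

```python
import base64
import re

# Matches -EncodedCommand / -enc / -ec / -e followed by a Base64 argument.
ENCODED_ARG = re.compile(
    r"(?i)(?:^|\s)-e(?:nc(?:odedcommand)?|c)?\s+([A-Za-z0-9+/]+={0,2})"
)


def decode_encoded_command(cmdline: str):
    """Return the decoded script if the command line carries an encoded command, else None."""
    m = ENCODED_ARG.search(cmdline)
    if m is None:
        return None
    raw = base64.b64decode(m.group(1))
    return raw.decode("utf-16-le")  # PowerShell encodes the script as UTF-16LE before Base64


# Build a sample the same way PowerShell expects it: UTF-16LE bytes, then Base64.
script = "Write-Output 'hello'"
encoded = base64.b64encode(script.encode("utf-16-le")).decode("ascii")
cmd = "powershell.exe -NoProfile -enc " + encoded
print(decode_encoded_command(cmd))
```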

Several companies, such as Recorded Future, SOCRadar, and Anomali, have integrated ChatGPT into their cyber threat intelligence offerings. Recorded Future uses AI to power searches for cyber intelligence, employing ChatGPT to answer queries about specific threats affecting sectors like healthcare, classify vulnerabilities by risk, and provide the latest information on particular vulnerabilities and affected products. SOCRadar integrates ChatGPT into its Threat Intelligence product, which combines modules for Cyber Threat Intelligence, Brand Protection, External Attack Surface Management, and Dark Web Radar to strengthen overall security posture. Anomali uses ChatGPT in its SOC platform for unified natural-language queries and NLP-based threat intelligence features, including a web browser extension that analyzes web pages and documents to support threat analysis.

ChatGPT can also help newcomers to cybersecurity shorten the learning curve of security tools. Users can ask questions about specific tools, their functionalities, and how to use them effectively. They can also turn to ChatGPT when they run into issues or errors while using these tools: by describing the problem, they can receive guidance on potential solutions, debugging techniques, and best practices.

🤖 Final thoughts and Future Directions ✈️

While ChatGPT offers remarkable capabilities in natural language understanding and generation, its integration into cybersecurity operations and automation raises significant concerns and risks. ChatGPT lacks a deep understanding of context and may provide inaccurate or incomplete responses. Additionally, the model's reliance on pre-existing data may perpetuate biases present in the training data, introducing discrimination or unintended consequences in cybersecurity decision-making. The black-box nature of the model poses challenges in comprehending its outputs, making it difficult to troubleshoot and validate the security of automated actions. Furthermore, as previously mentioned, safeguarding data privacy remains a significant concern when employing cloud models in security operations.

As we have seen in this post, ChatGPT can successfully assist in threat intelligence analysis, anomaly detection, and incident response tasks. However, it should always be used in conjunction with established cybersecurity practices and tools. Human oversight and expertise are paramount, as the model may not have the most up-to-date information or context-specific knowledge. Users should always verify information obtained from ChatGPT against authoritative sources and be aware that the model can produce inaccurate or biased content. Additionally, users should prioritize the security and privacy of data when interacting with ChatGPT, implementing robust access controls and encryption mechanisms to safeguard sensitive information.

The integration of ChatGPT into cybersecurity blue teams marks a significant step forward in the endless quest for digital defense. By harnessing the power of natural language processing, GenAI will change the way forensicators and threat hunters work today. In this scenario, the partnership between human expertise and AI-driven models becomes not just a necessity but a force multiplier. In the dynamic realm of cybersecurity, where adaptability is a must, ChatGPT emerges as a valuable ally, offering insights, analysis, and collaboration that redefine the boundaries of cyber-defense. As blue teams continue to face increasingly sophisticated adversaries, the fusion of human intellect and AI exemplifies the collaborative future of cybersecurity, where resilience is not just a goal but a shared journey towards a safer digital world.

In the next post of the series, we will dig into the use of ChatGPT for Red Teams, so stay tuned and keep an eye on our latest news!!

- Cybersecurity & ChatGPT - Part 2 - Generative AI for Blue Teams 👈🏼

We hope you enjoyed this content!

Stay tuned, and contact us if you have any comments or questions!

References:

- [3] URLNet (arXiv): https://arxiv.org/abs/1802.03162

- [4] BERT-Based Approaches to Identifying Malicious URLs (MDPI Sensors): https://www.mdpi.com/1424-8220/23/20/8499

- [8] Introducing Claude 2.1 (Anthropic): https://www.anthropic.com/index/claude-2-1

Follow us: Twitter: @ds4n6_io - Youtube