Threat Hunting with ML - Part 6 - Detecting the Solarwinds Malicious Scheduled Task with a LSTM Autoencoder

June 14, 2021 | Jess Garcia - One eSecurity | Twitter: j3ssgarcia | LinkedIn: garciajess

[ Full blog post series available here ]

Photo by Jeffrey Brandjes on Unsplash

In the previous blog post in this series we showed that an Autoencoder was not very effective at detecting the malicious Solarwinds scheduled task via either event log or file system analysis. We then defined a methodology (Artifact Pivoting) which, by restricting the second dataset (file system entries) to the scheduled tasks found in the top 25% most anomalous results of the first dataset (event logs), gave us a better overall success rate: the malicious task landed in the top 100 out of 6.593 file system entries.

The question is now: can we come up with a better technique to detect the malicious scheduled task?

In this post we will describe how, by using an Autoencoder and incorporating the time variable into our analysis via a Machine Learning architecture that supports it (LSTM), we get much better results.

Our Dataset

For this blog post we will use the scheduled tasks event logs only:

- 30 days of real world production server Task Scheduler event logs for 100 servers → 224.206 event log entries

We will therefore not use the file system metadata for the Scheduled Tasks XML files, as we did in the previous post.

Also, in the analysis technique presented in this blog post we will not collapse the events into “unique” entries as we did in previous blog posts. Instead, we will present each and every event to our Neural Network.

The Time Dimension, Recurrent Neural Networks and the LSTM Architecture

As you may remember, in the previous parts we mentioned that our analysis would be performed on a set of unique event log entries. This means that we did not take into account that many scheduled task events are repetitive by nature and will therefore appear over and over again in the event logs. Nor did we consider that a specific scheduled task name (and other characteristics) repeating over and over again in the logs makes it less anomalous. We only considered whether some combination of fields is more or less anomalous than another. In summary, we did not consider the Time dimension.
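To make the difference concrete, here is a minimal sketch that contrasts the collapsed “unique” view with the full event sequence. The task names, timestamps and tuple layout are made up for illustration and are not taken from our dataset.

```python
from collections import Counter

# Hypothetical (timestamp, event_id, task_name) tuples; illustrative only.
events = [
    ("2021-01-01T00:00", 200, r"\Backup"),
    ("2021-01-01T00:00", 201, r"\Backup"),
    ("2021-01-02T00:00", 200, r"\Backup"),
    ("2021-01-02T00:00", 201, r"\Backup"),
    ("2021-01-02T03:17", 106, r"\OneOffTask"),
]

# Collapsed view: one row per distinct (event_id, task_name) combination.
unique_entries = sorted({(eid, task) for _, eid, task in events})

# Sequence view: every event is kept, in chronological order.
sequence = sorted(events)

# What the collapsed view throws away: how often each combination occurs.
occurrences = Counter((eid, task) for _, eid, task in events)

print(len(unique_entries), "unique entries vs", len(sequence), "events")
for key, count in occurrences.most_common():
    print(key, count)
```

In the collapsed view the recurring backup task and the one-off task look equally “present”. Only the sequence view keeps the information that one of them repeats and the other does not.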

The analysis of series of events or measurements versus time is the basis of a very wide field in Data Science and Machine Learning called Time Series Analysis[1,2,3].

We will not dive into the Time Series world now. We will just focus on a type of Neural Network that is able to include the time dimension in our analysis: Recurrent Neural Networks (RNNs). A RNN is a class of artificial neural network where connections between nodes form a directed graph along a temporal sequence, allowing it to exhibit temporal dynamic behavior[4]. In short, RNNs are able to “remember the past”.

There is a specific type of RNN which has shown some of the best results in this area, solving some of the problems of standard RNNs: the Long Short-Term Memory (LSTM) RNN[5] architecture. LSTMs are able to remember past events (both short and long term) and the specific sequence in which those events happened, and at the same time they are resilient to the real-world problem of missing events/measurements. These characteristics have made LSTMs one of the most successful NN architectures for sequence analysis.

If you think about it, scheduled task event logs seem a perfect fit for this type of architecture, since the same events typically recur in a more or less regular sequence.

It is somewhat intuitive that if a specific scheduled task appears over and over again with a regular pattern it will be less anomalous, and if another scheduled task that we have not seen before suddenly appears in the logs it will be more anomalous, especially if it does not repeat again.

As such, from our analytical point of view, our problem is a little more complex now: we still want to find anomalies, not only in terms of the combination of different fields, but also in “sequencing” terms. That is, we want to identify event log entries that appear at a time and with a frequency/recurrence which does not correspond to past observations. We expect that the malicious Solarwinds scheduled task will show anomalous behavior along these lines.
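The intuition above can be illustrated with something much simpler than an LSTM. The following sketch is only a stand-in for the idea and is not the technique used in this post: it scores each event by how unexpected its task name is given everything seen so far, using a smoothed running frequency. The function name and the sample task names are hypothetical.

```python
import math
from collections import Counter

def surprise_scores(task_names):
    """Score each event by how unexpected its task name is given the past.

    Uses -log of an add-one smoothed running frequency. This is a simple
    stand-in for the intuition only; it is not an LSTM.
    """
    seen = Counter()
    scores = []
    for position, name in enumerate(task_names):
        # Probability estimate from the events observed so far, reserving
        # one extra slot for "a task name we have never seen".
        probability = (seen[name] + 1) / (position + len(seen) + 1)
        scores.append(-math.log(probability))
        seen[name] += 1
    return scores

# A task that repeats regularly, interrupted once by a never-seen task.
stream = ["Backup"] * 20 + ["OneOffTask"] + ["Backup"] * 5
scores = surprise_scores(stream)
most_surprising = scores.index(max(scores))
print(most_surprising, stream[most_surprising])
```

A frequency counter like this knows nothing about ordering or timing, which is precisely what a LSTM adds, but it already shows why a task that appears once out of the blue stands out against a repeating background.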

Ok, cool, you convinced me, Jess. I like this LSTM architecture. But… is there a way to combine our beloved Autoencoder (which is great at anomaly detection) with this LSTM architecture you mention?

The LSTM Autoencoder

Well, as you can imagine by the title of this section the answer to the previous question is… YES! We can indeed combine an Autoencoder architecture and a LSTM architecture.

At this point of the game you should be more than familiar with Autoencoders, their architecture and how they can be used to detect anomalies in a scheduled tasks event logs dataset, so I will not go back to that (if you are not, please check part 4 of this blog post series).

I would love to be able to post here an intuitive graph of what a LSTM Autoencoder architecture looks like, but it is actually not that easy to represent. You can simply think of a LSTM Autoencoder as a traditional Autoencoder, but with “memory”.
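In the absence of a graph, a few lines of code may help. The following is a minimal sketch of a commonly used LSTM Autoencoder layout in Keras (encoder LSTM, `RepeatVector`, decoder LSTM, per-timestep `Dense`). It assumes TensorFlow is installed, and the function name, layer sizes, window length and random input data are all placeholders chosen for illustration, not the model used to produce the results in this post.

```python
import numpy as np
from tensorflow import keras
from tensorflow.keras import layers

def build_lstm_autoencoder(timesteps, n_features, latent_dim=16):
    """Encoder LSTM -> repeated latent vector -> decoder LSTM -> reconstruction."""
    model = keras.Sequential([
        layers.Input(shape=(timesteps, n_features)),
        # Encoder: compress the whole window into one latent vector.
        layers.LSTM(latent_dim),
        # Feed that latent vector to the decoder once per timestep.
        layers.RepeatVector(timesteps),
        # Decoder: unroll the latent vector back into a sequence.
        layers.LSTM(latent_dim, return_sequences=True),
        # Map each decoded timestep back to the original feature space.
        layers.TimeDistributed(layers.Dense(n_features)),
    ])
    model.compile(optimizer="adam", loss="mse")
    return model

# Random placeholder data: 8 windows of 10 events with 4 features each.
windows = np.random.rand(8, 10, 4).astype("float32")
model = build_lstm_autoencoder(timesteps=10, n_features=4)
model.fit(windows, windows, epochs=1, batch_size=4, verbose=0)

# Reconstruction error per window: higher means more anomalous.
reconstruction = model.predict(windows, verbose=0)
loss_per_window = np.mean((windows - reconstruction) ** 2, axis=(1, 2))
print(loss_per_window.shape)
```

As with the standard Autoencoder, the model is trained to reproduce its own input, and the reconstruction error is what we use as the anomaly score.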

Since we are dealing with a sequence of event logs, what we need to do is present each one of those events to the LSTM Autoencoder, so it can learn which events appear, when they appear and with what recurrence. I will not go into the low-level specifics of how exactly that happens here; I will explain that in more depth in part 8 of this blog post series.
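Without anticipating part 8, one common way of presenting a sequence to a recurrent network is to slice it into overlapping windows of consecutive events. The sketch below assumes that approach and assumes each event has already been encoded as a numeric feature vector; the helper name, the window length and the values are hypothetical.

```python
def make_windows(events, timesteps):
    """Return overlapping windows of `timesteps` consecutive events."""
    if timesteps < 1:
        raise ValueError("timesteps must be >= 1")
    return [events[i:i + timesteps] for i in range(len(events) - timesteps + 1)]

# Each event is already encoded as a numeric feature vector (made-up values).
encoded_events = [[0.1, 0.0], [0.2, 1.0], [0.1, 0.0], [0.9, 1.0], [0.1, 0.0]]
windows = make_windows(encoded_events, timesteps=3)
print(len(windows), "windows of", len(windows[0]), "events")
```

Every event keeps its place in the sequence, so repetition and ordering are preserved instead of being collapsed away.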

If we represent the reconstruction loss (error) of LSTM Autoencoders, similarly to what we did with the standard Autoencoders, but taking into account that we are now dealing with repeating event logs (a sequence), this is how things would look:

Going back to the “cat” scenario that we have been using in previous blog posts, the idea here is that we will not only try to identify whether a cat is anomalous, we will also try to identify whether the moment and the recurrence with which we see the cat are anomalous. If we see a normal cat (one that we have seen before) at 2 am, and it turns out that we have never seen it at that time of day, then this is an anomaly. In this case it is an anomaly not because this is an elephant or a strange cat, but because the recurrence and the timing are unusual.

Detecting the Solarwinds Malicious Scheduled Task via Event Log Analysis

Now that we understand LSTM Autoencoders, the next question is: how effective will a LSTM Autoencoder be in detecting the malicious Solarwinds scheduled task?

As you may remember from part 5 of this blog post series, we used 2 strategies:

- The first strategy was to run a standard Autoencoder on the scheduled tasks event log dataset, specifically over the unique scheduled tasks event log set (i.e. not considering sequence or repetition). We did the same for the file system metadata associated with the scheduled tasks XML files under C:\Windows\System32\Tasks. Unfortunately, in both cases the Solarwinds scheduled task was not classified as very anomalous, and therefore we would not have been able to detect the attack this way.

- The second strategy was to use Artifact Pivoting. Under the assumption that the malicious Solarwinds scheduled task would be in the top 25% of the event log anomalies, we ran the standard Autoencoder on the event log dataset and obtained the top 25% most anomalous scheduled task names. We then pivoted to the file system timeline and ran the Autoencoder again, but only on those top 25% scheduled tasks obtained in the first phase. This technique proved to be more effective and we managed to get the malicious Solarwinds scheduled task in the top 100 results. According to our original scenario, this would have allowed us to detect the attack, although only barely. This means that if we scaled up the number of hosts (our case study dataset was 100 servers) we might not have been able to detect it.
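As a reminder of how the pivot works, here is a minimal sketch of its filtering step. It assumes we already have one anomaly score per event log entry from the first Autoencoder run; the function names, field names, scores and task names are hypothetical, and the second Autoencoder run over the reduced set is not shown.

```python
import math

def top_fraction_names(scored_events, fraction=0.25):
    """Return the task names found among the top `fraction` most anomalous events."""
    ranked = sorted(scored_events, key=lambda item: item[1], reverse=True)
    keep = math.ceil(len(ranked) * fraction)
    return {name for name, _ in ranked[:keep]}

def pivot(filesystem_entries, names):
    """Keep only the file system entries whose task name is in `names`."""
    return [entry for entry in filesystem_entries if entry["task"] in names]

# Hypothetical (task_name, anomaly_score) pairs from the event log model.
event_scores = [("TaskA", 0.91), ("TaskB", 0.15), ("TaskC", 0.40), ("TaskD", 0.05)]
# Hypothetical file system timeline rows for the scheduled tasks XML files.
fs_entries = [
    {"task": "TaskA", "path": r"C:\Windows\System32\Tasks\TaskA"},
    {"task": "TaskB", "path": r"C:\Windows\System32\Tasks\TaskB"},
    {"task": "TaskC", "path": r"C:\Windows\System32\Tasks\TaskC"},
]

suspects = top_fraction_names(event_scores, fraction=0.25)
reduced = pivot(fs_entries, suspects)
print(suspects, len(reduced))
```

The second model then only has to rank the reduced set, which is why the malicious task moves up in the results.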

What we will do now is run the LSTM Autoencoder on all 30 days of scheduled tasks event log data, a total of 224.206 event log entries, and see in which anomaly position the malicious Solarwinds scheduled task appears.

The following short video shows how the LSTM Autoencoder classifies the 4 Solarwinds scheduled task events.

Well, honestly, the results are amazing! One of the 4 malicious scheduled task event log entries has been detected as the most anomalous event in the entire dataset of 224.206 event log entries!

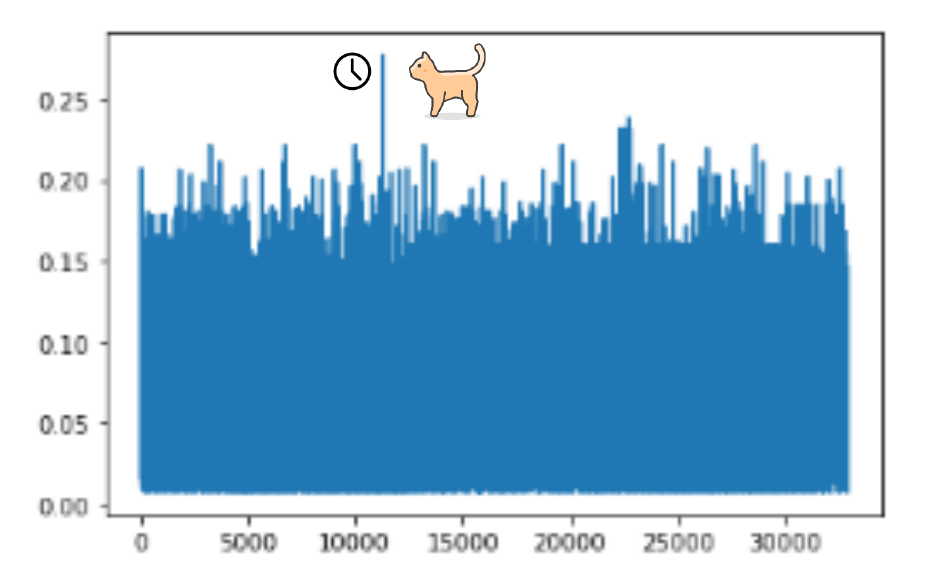

Let's take a closer look at how each of the 4 malicious event log entries was classified in terms of anomaly:

In this output, the first column shows the anomaly position of that specific scheduled task event log entry. We can therefore see that:

- The 2 events associated with the creation of the malicious Solarwinds scheduled task (Event IDs 106 & 140) appear as the top 2 most anomalous events (positions 0 and 1, counting from 0) out of 224.206 event log entries.

- The 2 events associated with the execution of the malicious Solarwinds scheduled task (Event IDs 200 & 201) appear much further down the list, in positions 14.964 and 108.761 out of 224.206 event log entries.

In summary, the creation of the malicious Solarwinds scheduled task is the most anomalous event in the sequence of events in the 30 day event log dataset.
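The “anomaly position” used above is simply the rank of each event when all events are sorted by reconstruction error, highest first and counting from 0. A minimal sketch of that ranking follows; the function name and the loss values are invented for illustration and are not the real ones.

```python
def anomaly_positions(losses):
    """Map each event index to its 0-based rank, highest loss first."""
    order = sorted(range(len(losses)), key=lambda i: losses[i], reverse=True)
    return {event_index: rank for rank, event_index in enumerate(order)}

# Hypothetical per-event reconstruction errors (not the real values).
losses = [0.02, 0.03, 0.95, 0.90, 0.10, 0.01]
positions = anomaly_positions(losses)

# Index of the single highest peak in the loss series.
peak_index = max(range(len(losses)), key=lambda i: losses[i])
print("most anomalous event index:", peak_index)
print("positions of events 2 and 3:", positions[2], positions[3])
```

In this toy series, events 2 and 3 play the role of the 106 and 140 events: they are adjacent in the log and take positions 0 and 1.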

As a result, we can conclude that we would have been able to detect the attack if we had been using this Threat Hunting technique back when the attack happened (well, that is in a best case scenario; please refer to the “Reality Bites” section at the end of part 2 of this blog post series for a more realistic perspective).

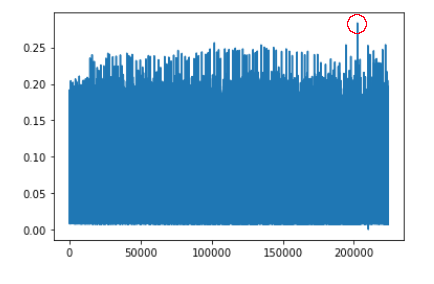

In the following loss graph, which represents the Autoencoder reconstruction error for each scheduled task event log entry in the dataset (from 0 to 224.205), you can clearly see a peak around the value 200.000 which corresponds to the 106 and 140 events discussed (entries 202.470 and 202.471):

Well that's the end of our trip! We have been able to find a Machine Learning architecture, the LSTM Autoencoder, which would have been able to detect the creation of the malicious Solarwinds scheduled task as the most anomalous event in a month of data for 100 servers. Not bad!

From here we could of course combine the different techniques presented so far to make them more effective in different scenarios or to provide different perspectives of the data. In all, the techniques presented make a powerful weapon for the Forensicator or Threat Hunter to be used when/as needed, which was our initial objective.

In the next parts of this blog post series we will go a little more in-depth into the Machine Learning side of things, so you can understand how things work under the hood. This way you will be able to adapt these techniques to similar problems, even if they are not exactly the same as the one we have presented here. The Autoencoder, the LSTM Autoencoder and the Artifact Pivoting techniques presented are very flexible and can be applied to a myriad of different situations.

Stay tuned, and contact us if you have any comments or questions!
